Vincent Dumoulin, Jonathon Shlens, and Manjunath Kudlur have extended image style transfer by creating a single network which performs more than one stylization of an image. The paper[1] has also been summarized in a Google Research Blog post. The source code and trained models behind the paper are being released here.

The model creates a succinct description of a style. These descriptions can be combined to create new mixtures of styles. Below is a picture of Picabo[5] stylized with a mixture of 3 different styles. Adjust the sliders below the image to create more styles.

What is a pastiche generator?

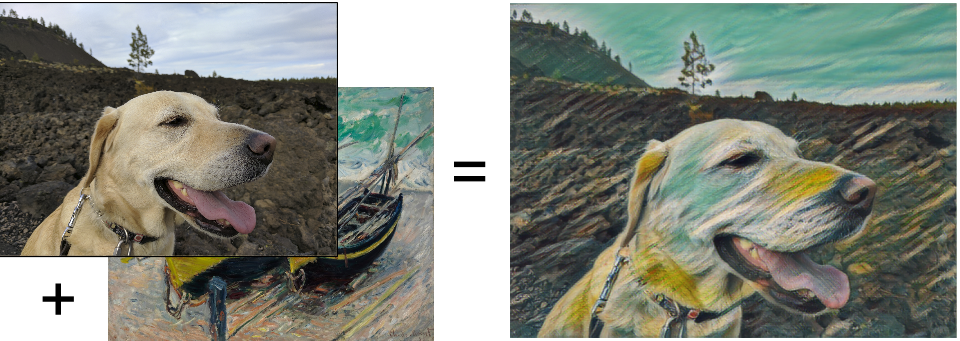

A pastiche is a work of art that emulates another work’s style. Magenta is open sourcing a model that creates a new image which transfers one of several styles from one image to another.

This is similar to work done by Johnson, et al[2]. The pastiche generation is done by a single feed forward network. The network is trained to simultaneously preserve two results taken from an image classification network. VGG-16[4] is the pretrained image classification network that’s used here.

First, the pastiche generator network is preserving the same distribution of signals extracted from the first few layers of the VGG-16 network. That is, when the style image is passed through the image classification network, the first few layers behave in a certain way. The pastiche generation network tries to create the same statistical distribution of outputs in these layers in its generated image that the style image created. This ensures that the brush strokes and pallet used in the generated image looks roughly similar to the style image’s brush strokes and pallet.

Second, the pastiche generator network also requires that the content of the generated network look the same to the VGG-16 network. Rather than requiring that the pixels be similar in the original photo and the generated image, it requires that the image classification network interpret the pixels the same. That is the image classification must have roughly the same outputs in a reasonably deep layer of the VGG-16 network. That means that groups of pixels that are interpreted as the eye of a dog will be interpreted similarly in both images even though it was painted using the same palette and brushes as the style image.

Here is an example of a photo of the same dog that has been stylized to look as though Claude Monet painted the dog instead of painting Three Fishing Boats. As you can see, the model must compromise in order to achieve this.

This diagram shows where these losses are extracted. The distribution of brush strokes is taken out at some of the early layers. The identification of content is extracted at a much deeper layer.

While the pastiche generation network is trained, the VGG-16 network is held constant. For exact details, see the paper.

Extending pastiche generation to multiple styles

Several modifications have been made to the standard style transfer network. The most significant change is to employ a new form of instance normalization[3] called conditional instance normalization in which a unique affine transformation is provided for each artistic style. The parameters governing the affine transformation may be considered an embedding space representation for each painting style. The rest of the network remains the same for all styles.

In addition, the boundary condition around the image is reflected. This reduces the discontinuity at the edge for the generated pastiche.

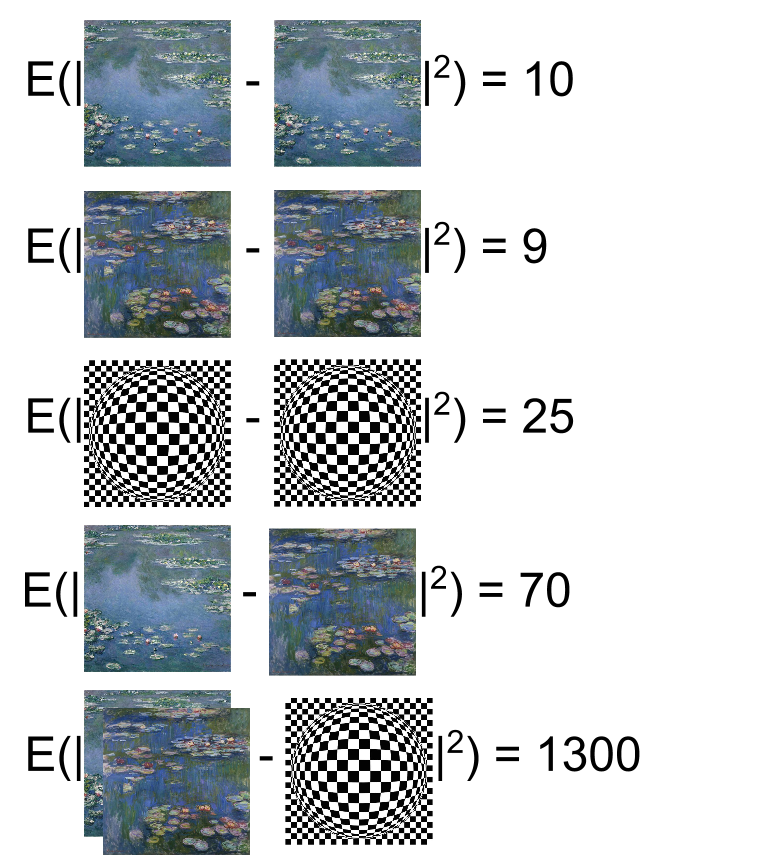

The network is identical for all styles that were trained except for 3k parameters that are specific to a single stylization. It’s possible to treat these weights as an embedding of the style. The network allows us to use linear combinations of multiple style images, for example.

Below are some precomputed combinations of significantly different dramatic styles. Changing the linear combinations of the three embeddings shows that new styles that are artistically different from the original embeddings can be created.

It’s also possible to measure the distance between embeddings to approximate the distance between different painting styles. If a series of embeddings of the same style image are taken, the distances are all small and similar. For example, the L2 distances for two different (but similar) water lillies paintings by Claude Monet are <25. Likewise, the average L2 distance between different water lillies paints is ~70. However, the distance between either of the water lillies paintings and an abstract black and white painting is ~1300. The abstract black and white style can be seen in Style #3 above.

Running the code

Running the code can be done easily using the Magenta Pip package. Directions for this are located on the GitHub site.

The included code can generate new stylized images, train new stylizations based on an existing model, or train a brand new model from scratch.

You may remember a video of realtime style transfer happening on a webcam from the Google Research Blog post. Here’s the video:

The tool on the left allows you to change the style in real time. This tool will be open sourced in the next few days. Currently, it is not available. Watch the GitHub site and Magenta Discuss for updates.

What’s next?

If you try something and it looks good, let us know! The Magenta team has a discussion list at Magenta Discuss and we’ll be interested in seeing what you’ve created.

References

[1] Vincent Dumoulin, Jonathon Shlens, and Manjunath Kudlur. A Learned Representation for Artistic Style (2016).

[2] Johnson, Justin, Alexandre Alahi, and Li Fei-Fei. Perceptual Losses for Real-Time Style Transfer and Super-Resolution (2016).

[3] Dmitry Ulyanov, Andrea Vedaldi, and Victor Lempitsky. Instance Normalization: The Missing Ingredient for Fast Stylization (2016).

[4] Karen Simonyan and Andrew Zisserman. Very Deep Convolutional Networks for Large-Scale Image Recognition (2015).

[5] Picabo, a Google Brain team diabetic alert dog trained by Dogs4Diabetic and bred by Canine Companions for Independence.