Editorial Note: We’re excited to feature a guest blog post by another member of our extended community, Hanoi Hantrakul, whose team recently won the Outside Lands Hackathon by building an interactive application based on NSynth.

Introduction

I’m Hanoi Hantrakul, a member of the team behind “mSynth”, the music hack that won this year’s Outside Hacks, the official 24-hour music hackathon of the Outside Lands Music Festival in San Francisco. Our team developed an interactive artist-audience experience in which festival goers collectively control Magenta’s NSynth in real time by tilting their phones. We built a mobile application in React Native that streams accelerometer data from multiple users in real time to PubNub’s datastream network. The data is aggregated in the cloud through PubNub Functions, and the average is published in real time to an artist’s computer running NSynth in Ableton, Max/MSP and a custom Python script. When an artist performs using mSynth, the generated sound is the combined result of the audience’s interaction!

Inspiration

NSynth is the coolest development in Digital Signal Processing (DSP) in the last decade: it completely redefines how we think of sound synthesis. And where better to deploy it than a music hackathon? When I pitched my idea of using deep learning and neural networks to generate music collaboratively, Rodaan Peralta-Rabang, Eric Chen, Sam Samskies and Rohith Madhavan were hooked!

From “N”Synth to “m”Synth

mSynth is short for “Mobile Synth”, the mobile and interactive cousin of NSynth :) Given that we had only 24 hours to put everything together, we knew we weren’t going to train any models. Instead, we built our hack around the Max4Live version of NSynth. The key to our success was an efficient division of labor. Between the five of us, we had knowledge of full-stack engineering, music and deep learning. I focused on connecting the Max4Live NSynth plugin running in Ableton to a Python script that subscribed to messages published on the PubNub network. Eric, Rodaan and Rohith teamed up to build the React Native app and user interface. Sam took the lead on creating the serverless architecture that collects and averages accelerometer data from multiple phones. Because all of these technologies were so easy to use (Magenta’s open-source models, React Native’s familiar JavaScript syntax and PubNub’s datastream network, where I was interning at the time), we had everything working within 12 hours. We spent the rest of our time polishing the app and perfecting the pitch and musical demo for the judges.

Technical Implementation

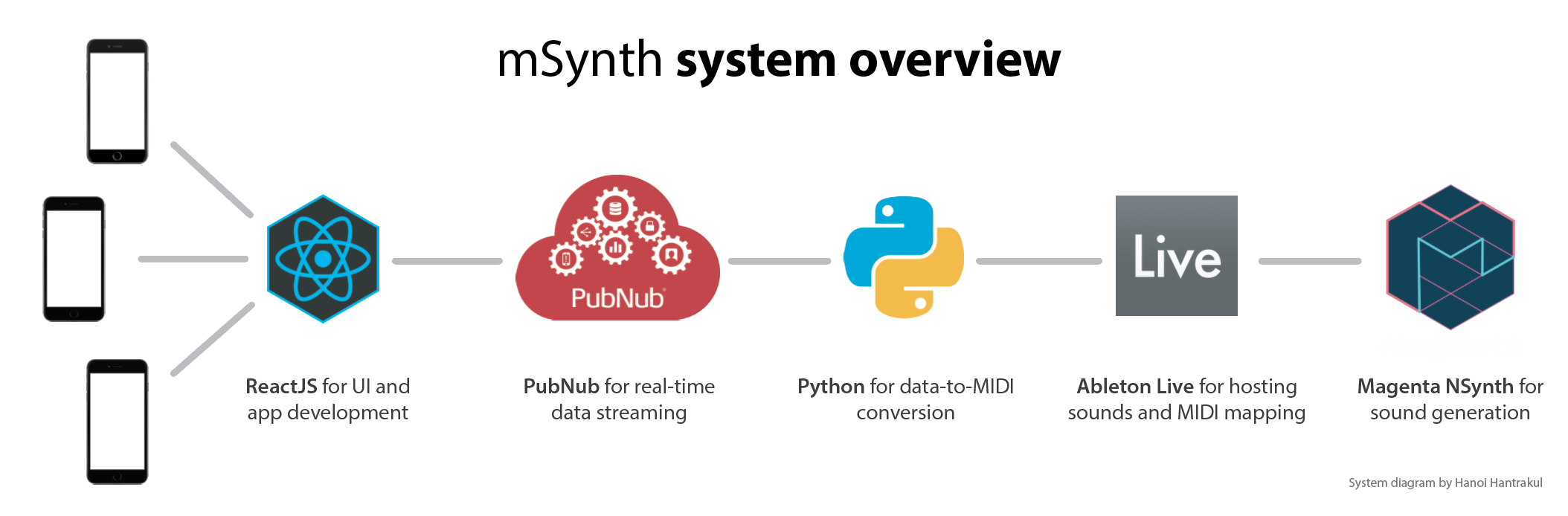

How did we string everything together? This diagram sums up how the different modules interact with one another.

Ableton Live, Max4Live and Python:

The Max4Live NSynth plug-in was very easy to set up (you can learn how here). Normally, you would connect a MIDI controller to the NSynth parameters using Ableton Live’s robust MIDI mapping capabilities, but this is harder with systems that do not follow the standard MIDI protocol. Fortunately, macOS includes an internal “IAC Bus” (Inter-Application Communication) that music hackers like me and many others often use to receive data from DIY devices like Arduinos or from custom Python scripts. Note that the IAC bus is not enabled by default and needs to be turned on in the Audio MIDI Setup app. The IAC device must then be enabled as a “Track” and “Remote” input in Ableton Live’s MIDI preferences. I wrote a Python script that translates normalized sensor data from PubNub into the appropriate MIDI Control Change (CC) messages using the mido Python library. While the script runs in the background, any data processed through PubNub controls NSynth through MIDI messages, just like a standard MIDI controller!
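A minimal sketch of that translation step might look like the following. It assumes the incoming tilt values are floats normalized to [-1, 1] and that each axis goes out on its own CC number; the CC numbers 20 and 21, the channel, the helper names and the port name `IAC Driver Bus 1` are illustrative assumptions rather than the values we used. Only `mido.Message`, `mido.open_output` and `mido.get_output_names` are real mido calls.

```python
"""Sketch: translate normalized tilt readings into MIDI CC messages with mido.

Assumptions (hypothetical, not from the original hack): readings are floats
in [-1, 1]; x and y go out on CC 20 and 21 on MIDI channel 1 (mido channel 0).
"""

# Hypothetical CC assignments; map these to NSynth parameters in Ableton.
CC_X = 20
CC_Y = 21


def normalized_to_cc(value: float, lo: float = -1.0, hi: float = 1.0) -> int:
    """Map a reading in [lo, hi] to a 7-bit MIDI CC value (0-127), clamping outliers."""
    clamped = min(max(value, lo), hi)
    return int(round((clamped - lo) / (hi - lo) * 127))


def send_tilt(port, x: float, y: float, channel: int = 0) -> None:
    """Send the x and y tilt as two Control Change messages on an open mido output port."""
    import mido  # imported lazily so the mapping above works without mido installed

    for control, reading in ((CC_X, x), (CC_Y, y)):
        msg = mido.Message("control_change", channel=channel,
                           control=control, value=normalized_to_cc(reading))
        port.send(msg)


# Usage (assumes mido plus a backend such as python-rtmidi, and the IAC bus enabled):
#   import mido
#   print(mido.get_output_names())          # find the IAC port's exact name
#   with mido.open_output("IAC Driver Bus 1") as port:
#       send_tilt(port, x=0.25, y=-0.5)
```

Clamping keeps a noisy reading from producing a value outside 0-127, which mido would reject when building the message.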

React Native App

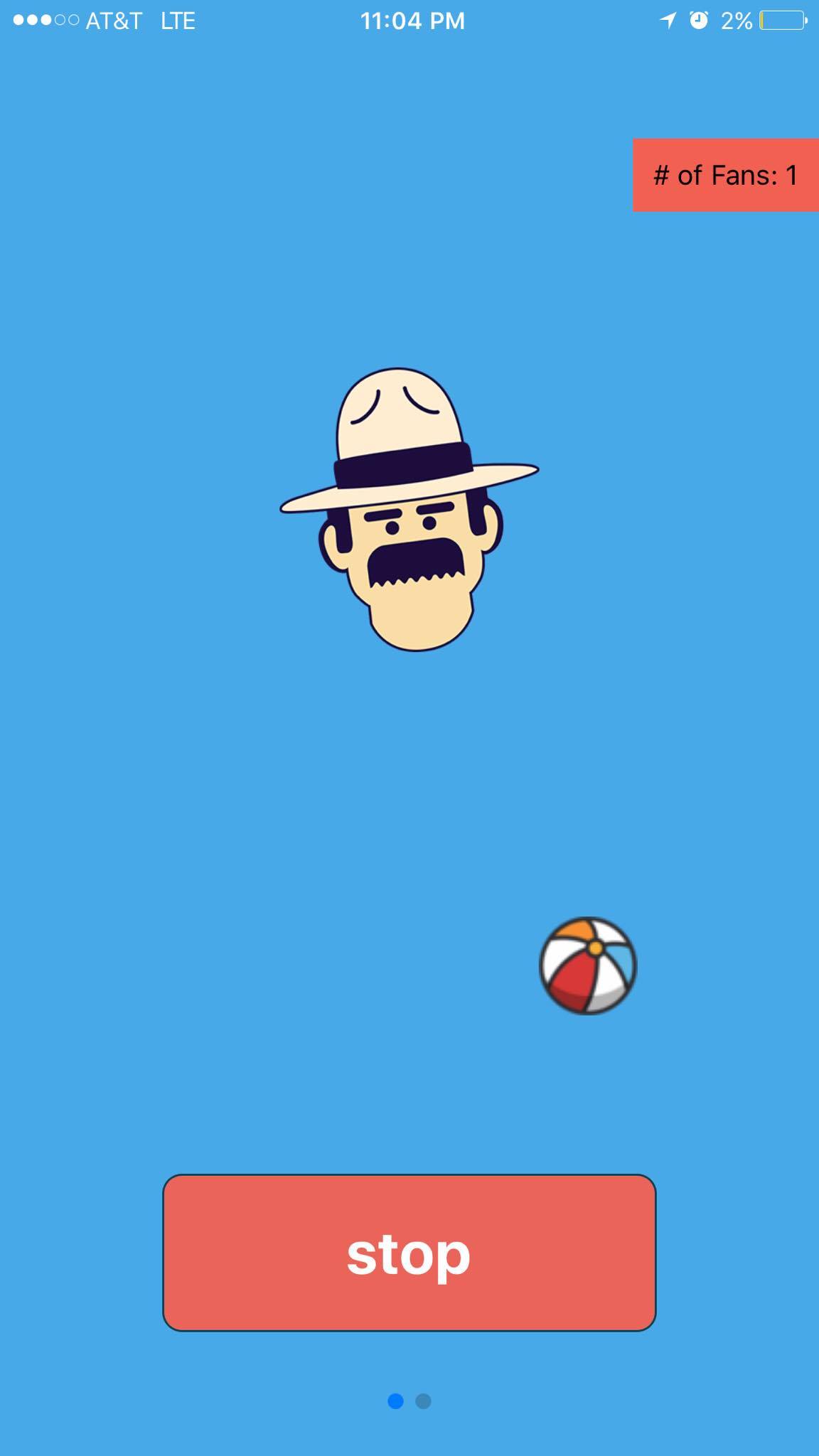

React Native enabled us to get a sleek UI and a testable demo very quickly using the familiar ReactJS syntax. We built on an existing open-source project that leveraged the phone’s sensor data, and we were able to iterate quickly through different prototypes without recompiling after every change, as traditional app development requires. We were also able to deploy to iOS and Android from the same codebase! Integrating PubNub was also a breeze: PubNub supports over 80 SDKs, so we simply pulled its JavaScript SDK into our app with the npm package manager.

mSynth UI - the beachball indicates the total aggregate x,y tilt of all connected phones.
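The app itself is JavaScript, but the idea behind the phone-side step can be sketched in Python to match the rest of this post. This sketch assumes, hypothetically, that each phone divides its raw accelerometer x and y readings (in m/s²) by standard gravity to get a tilt in roughly [-1, 1] and publishes a small JSON message tagged with a device ID. The field names, function name and scaling are illustrations, not mSynth’s actual message format.

```python
import json

# Standard gravity in m/s^2; assumes the accelerometer reports in these units.
STANDARD_GRAVITY = 9.80665


def tilt_payload(device_id: str, ax: float, ay: float) -> str:
    """Build a hypothetical JSON message carrying one phone's normalized tilt.

    ax, ay are raw accelerometer readings in m/s^2. Dividing by gravity gives
    roughly -1..1 while the phone is held still; clamping absorbs shakes.
    """
    def norm(a: float) -> float:
        return max(-1.0, min(1.0, a / STANDARD_GRAVITY))

    return json.dumps({"device": device_id, "x": norm(ax), "y": norm(ay)})


# Example: a phone tilted fully along x and shaken hard along y.
#   tilt_payload("phone-1", 9.80665, -20.0)
#   -> '{"device": "phone-1", "x": 1.0, "y": -1.0}'
```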

PubNub Serverless Architecture

We used PubNub’s JavaScript and Python SDKs to connect the phones to the artist’s laptop running NSynth. PubNub has a feature called Functions, in which JavaScript functions hosted in the cloud process data as it is published to the stream. We wrote a PubNub Function that computes a running average of the data from all connected devices and beams the result to the artist’s laptop running the aforementioned Python script.
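PubNub Functions are written in JavaScript, so what follows is only a Python sketch of the aggregation logic described above, not our Function’s code. It assumes messages shaped like `{"device": ..., "x": ..., "y": ...}` and interprets the running average as the mean of each device’s most recent reading, so a phone that publishes more often does not outweigh the others. The class name and the staleness timeout are hypothetical choices.

```python
import time
from typing import Dict, Optional, Tuple


class TiltAverager:
    """Average the latest (x, y) tilt reported by each connected device.

    Hypothetical helper: readings older than `timeout` seconds are treated as
    coming from disconnected devices and are dropped from the average.
    """

    def __init__(self, timeout: float = 5.0) -> None:
        self.timeout = timeout
        # device id -> (x, y, time of last update)
        self._latest: Dict[str, Tuple[float, float, float]] = {}

    def update(self, message: dict, now: Optional[float] = None) -> Tuple[float, float]:
        """Record one device's reading and return the current average tilt."""
        now = time.monotonic() if now is None else now
        self._latest[message["device"]] = (float(message["x"]), float(message["y"]), now)
        return self.average(now)

    def average(self, now: Optional[float] = None) -> Tuple[float, float]:
        """Mean x and y over devices heard from within the timeout; (0, 0) if none."""
        now = time.monotonic() if now is None else now
        self._latest = {d: r for d, r in self._latest.items() if now - r[2] <= self.timeout}
        if not self._latest:
            return (0.0, 0.0)
        n = len(self._latest)
        return (sum(r[0] for r in self._latest.values()) / n,
                sum(r[1] for r in self._latest.values()) / n)
```

Keeping one slot per device, rather than averaging every message that arrives, means the result reflects the crowd as a whole; the timeout keeps phones that have left from freezing the average in place.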

The future of music production and performance

I think that, in the same way Computer Vision was forever changed in 2012 by the successful introduction of Convolutional Neural Networks, audio will be forever changed by WaveNet and NSynth. This exciting new way of analyzing and generating audio marks a paradigm shift: a step beyond “traditional” synthesis methods like additive, subtractive, wavetable, granular and concatenative synthesis. In the future, I see commercial music software and hardware synthesizers adopting the NSynth model to generate new and inspiring sounds. Instead of tweaking oscillators and sifting through presets, musicians will be tweaking the source vector in the WaveNet autoencoder and jamming along to previously unheard sounds. It won’t be long until the “killer plug-in” is powered by some form of machine learning or deep learning. I also see traditional music production software shifting towards “AI-augmented” workflows, whether through more intelligent sample search based on machine learning or even a program like Ableton “listening” to your set and composing complementary basslines and chords.

Beyond AI-augmented creativity, I imagine fully-fledged robotic musicians that groove and make music alongside human beings. This vision is heavily influenced by my thesis committee, Dr. Gil Weinberg at the Georgia Tech Center for Music Technology and recent PhD graduate Dr. Mason Bretan, who advise my research on Deep Reinforcement Learning for robotic musical path planning. Doug Eck’s comment resonated with me: “high dimensional” creative works such as art and music are arguably generated by “low dimensional” embodied movements, like the flick of a paintbrush or a musical lick ingrained in muscle memory. I’m very interested in projects that pursue this avenue of research.

AI can also help make music more accessible to musicians and non-musicians alike. As Rodaan pointed out, music has always been changed and inspired by new technology, from the drum machine in the 1970s to modern music production software such as Ableton Live in the 2000s. AI and machine learning will simply become another tool in a musician’s creative process. In the past, one needed extensive practice, the right gear and musical knowledge to get started; modern musical tools have since lowered this barrier. As AI becomes more ubiquitous, beginners and seasoned musicians alike will be able to create new and inspiring music with it. We hope that through AI and machine learning, artists can focus on creating music rather than being bogged down by the technicalities of making those sounds. With systems like AIVA already registered as a composer, imagine the possibilities when man and machine come together to create art!

Conclusion

It is a very exciting time to be working at the intersection of technology and art. Generative Adversarial Networks are drawing anime, Recurrent Neural Networks and robots are composing music, and Convolutional Neural Networks are starting to sound like real human beings! I’m most excited to apply these creative tools to music and art from different cultures, especially those from my home region. I was born and raised in Thailand and play Thai folk instruments, so is anyone up for some RNNs on traditional melodies from the North of Thailand? :) Please share what you’ve created with the community!