The quality of outputs produced by deep generative models for music have seen a dramatic improvement in the last few years. Most of these models, however, perform in “offline” mode: they can take as long as they’d like before they come up with a melody!

For those of us that perform live music, this is a deal-breaker, as anything making music with us on stage has to be on the beat and in harmony. In addition to this, the generative models available tend to be agnostic to the style of a performer which could make their integration into a live set fairly awkward.

In this post I describe how I have begun exploring what it would take to take out-of-the-box generative musical models and integrate them into live performance. To do this, I make use of two “old” (for today’s standards) Magenta models: DrumsRNN and MelodyRNN.

You can read about the details in my ICCC paper, check out my open-source code for Python, and try it out yourself in my web app.

| 🎹🥁 Web App |

📝 ICCC 2019 Paper |

🐍 Python Code |

You can watch the full video (with audio!) below.

Drums

The system starts off by first building what I refer to as a deterministic drum groove over a bassline the user plays. It’s deterministic because the same bassline will always result in the same groove. The steps proceed as follows:

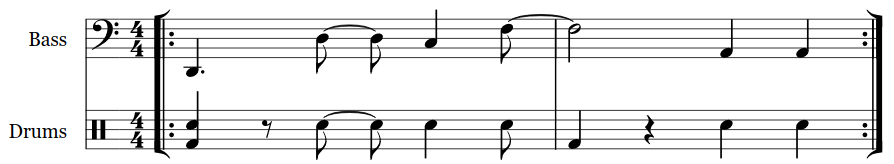

| The user starts off by playing a bassline, for example: | |

|

|

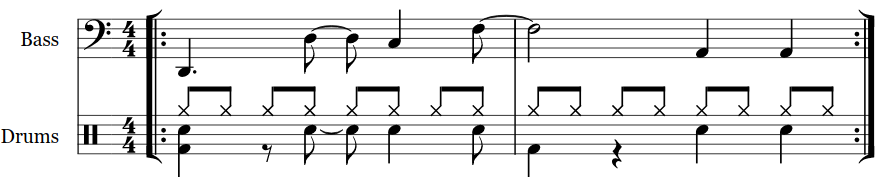

| To build the deterministic drum groove, first add a bass-drum hit on the first beat of each measure: | |

|

|

| Then add a snare hit to match each of the bass notes: | |

|

|

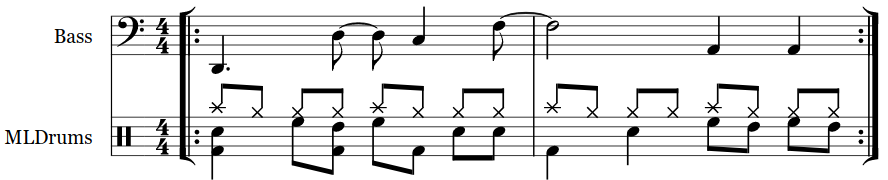

| A hi-hat is added on every 8th note: | |

|

|

| Finally this groove is sent to DrumsRNN, which will produce some new groove: | |

|

|

Depending on the temperature (a.k.a. “craziness” factor) selected when generating the new drum groove, the bassline might not match up nicely. I view this as the machine learning model presenting a creative opportunity for the user to come up with a novel bassline to match the rhythm.

Collaborative melodic improvisation

The melodic improvisation part of this work is inspired by the call-and-response style of improvising which is common in jazz: musicians take turns improvising over some harmonic changes. In my setup, the call-and-response occurs between the user and the models.

As mentioned above, getting a machine learning model to play on beat and be stylistically consistent can be quite challenging. I address these by creating a hybrid improvisation for the “response” part as follows.

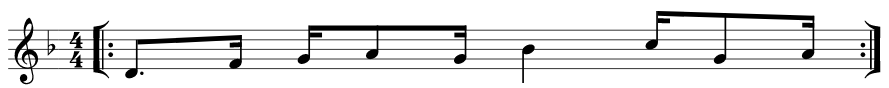

| Maintain a fixed-length buffer (think of it as a small memory bank) where the system stores all the notes the user has played in their last improvisation. For instance, let's say the melody below represents what the user just finished playing: | |

|

|

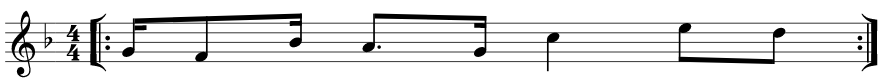

| Once the buffer is full the stored notes get sent to MelodyRNN, which will produce a new melody: | |

|

|

| All the rhythmic information in the new melody is discarded and only the pitches (or note names) are kept. For the example melody above, these pitches would be: | |

| {G, F, Bb, A, G, C, E, D} | |

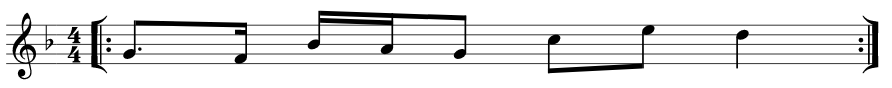

| Now let's say the user continued improvising the following melody: | |

|

|

| The hybrid improvisation is created by keeping the user's rhythm, but replacing the pitches with those produced by the model, resulting in the following melody (notice that the rhythm came from the second user improvisation, while the pitches came from the model): | |

|

|

Putting it all together

Watch me play with the system in the video below:

Why am I doing this?

Expert musicians tend to have a “pocket” where they go when performing live, as they know they can produce high-quality improvisations while in there. However, a common frustration amongst expert improvisers is not being able to leave that comfortable, but somewhat predictable, space (I know I face this frustration regularly!).

This work is exploring the possibility of using machine learning models to push expert musicians out of their comfort zone. Because they are well-trained musicians, they are able to navigate these uncomfortable spaces creatively. My hope is these newly-explored areas can lead to new and exciting rhythms and melodies.