Editorial Note: In August 2020, Magenta partnered with Gray Area to host BitRate, a month-long series focused on experimenting with the possibilities of music and machine learning. Here is a blog post from one of our top projects, in which the creator explains the project in more detail. Learn more about BitRate and view additional submissions on the event page.

Inspiration

I have been learning Bharatanatyam, a form of classical Indian dance, for 12 years. Dance is really important to me; it is one of the main passions in my life. Beyond its personal meaning, however, Bharatanatyam is one of the oldest forms of classical Indian dance. It carries tradition, mythology, and culture passed down from teacher to student over generations before ultimately reaching me, and it has survived imperialism, racism, fetishization, and oppression along the way.

Check out an example of Bharatanatyam

Despite its long and rich history, Bharatanatyam is still relatively unknown, and the artform has a long way to go toward recovery. Even now, classical Indian dance isn’t given the same respect as a classical style such as ballet. This is the result of more than a century of intentional suppression and vilification of such artforms, and it will not heal overnight. That being said, awareness and appreciation can go a long way.

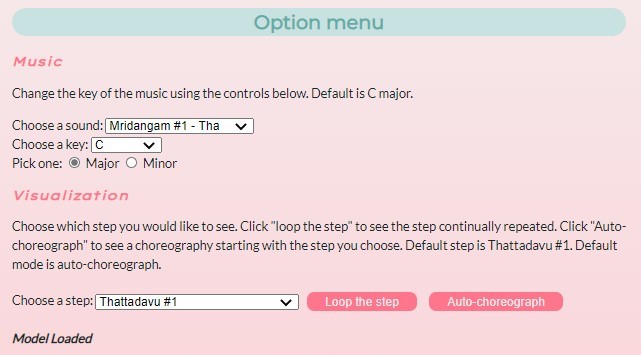

Through Natya*ML, I sought to showcase the beauty of this ancient artform in a way that’s easy to understand. Magenta’s use of quantized steps to dictate the rhythm of the music drew my attention. The idea that musical rhythm can be broken down into a fixed number of beats per measure is familiar to most musicians, and it also resonated with me as a dancer. Bharatanatyam combines precise rhythmic movements with expressive gestures to convey both dance and drama, and as a long-time dancer I know how precisely the steps are mathematically arranged within the music. The concept of quantized steps got me thinking about how it could be applied to choreographing a Bharatanatyam dance to music, which inspired Natya*ML.

I started with the auto-choreograph function and then created the “loop” feature so that an inexperienced viewer can watch each step in greater detail. I created this project for all the other dancers who’ve seen their artform diminished, for other Indians who treasure their culture, and for everyone with an open mind who is willing to learn.
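To make the idea of quantized steps concrete, here is a minimal sketch of how a time in seconds maps onto a rhythmic grid. It assumes 4/4 time and a grid of `stepsPerQuarter` steps per beat, which mirrors the `stepsPerQuarter` setting Magenta uses for quantized note sequences; the function names are hypothetical and are not taken from Natya*ML.

```typescript
// Hypothetical helpers illustrating rhythmic quantization (not Natya*ML code).
// Assumes 4/4 time and a fixed grid of `stepsPerQuarter` steps per beat.

/** Convert a time in seconds to the nearest quantized step index. */
function secondsToStep(seconds: number, qpm: number, stepsPerQuarter: number): number {
  // qpm = quarter notes (beats) per minute, so steps per second is:
  const stepsPerSecond = (stepsPerQuarter * qpm) / 60;
  return Math.round(seconds * stepsPerSecond);
}

/** Number of quantized steps in one measure. */
function stepsPerMeasure(stepsPerQuarter: number, beatsPerMeasure: number): number {
  return stepsPerQuarter * beatsPerMeasure;
}

/** True when a step lands exactly on a beat (a quarter note in 4/4). */
function isOnBeat(step: number, stepsPerQuarter: number): boolean {
  return step % stepsPerQuarter === 0;
}

// At 120 quarter notes per minute with 4 steps per quarter note:
const qpm = 120;
const spq = 4;
console.log(stepsPerMeasure(spq, 4));                      // 16
console.log(secondsToStep(0.5, qpm, spq));                 // 4 (one beat = 0.5 s)
console.log(isOnBeat(secondsToStep(0.5, qpm, spq), spq));  // true
```

At 120 beats per minute each beat lasts 0.5 seconds, so with 4 steps per quarter note a measure holds 16 steps and every fourth step falls on a beat. Those on-beat steps are natural places to start a dance movement.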

Project Goals

- Generate music from the user’s interactive input

- Auto-choreograph function: arrange the dance steps based on the beats of the music

- Visualize the dancer using a silhouette and skeletal rendering

- Collect frame-by-frame visualization information and store it
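The last goal, collecting frame-by-frame data, can be sketched as a log of per-frame keypoints. The `Keypoint` shape below is modeled on PoseNet’s output (a body-part name, a confidence score, and an x/y position), while the `PoseFrame` record, the `recordFrame` helper, the sample coordinates, and the 0.3 confidence cutoff are assumptions for illustration rather than the project’s actual code.

```typescript
// Hypothetical types modeled on PoseNet's keypoint output
// (part name, confidence score, pixel position). Not the original project's code.
interface Keypoint {
  part: string;
  score: number;
  position: { x: number; y: number };
}

interface PoseFrame {
  frame: number; // frame index within the step's video
  keypoints: Record<string, { x: number; y: number }>;
}

/** Append one frame's keypoints to the log, dropping low-confidence detections. */
function recordFrame(
  log: PoseFrame[],
  frame: number,
  keypoints: Keypoint[],
  minScore = 0.3, // assumed cutoff; tune for the video quality
): void {
  const kept: Record<string, { x: number; y: number }> = {};
  for (const kp of keypoints) {
    if (kp.score >= minScore) {
      kept[kp.part] = { x: kp.position.x, y: kp.position.y };
    }
  }
  log.push({ frame, keypoints: kept });
}

// Example with made-up values for two frames.
const poseLog: PoseFrame[] = [];
recordFrame(poseLog, 0, [
  { part: "leftWrist", score: 0.9, position: { x: 120, y: 200 } },
  { part: "rightWrist", score: 0.1, position: { x: 300, y: 210 } }, // dropped
]);
recordFrame(poseLog, 1, [
  { part: "leftWrist", score: 0.8, position: { x: 125, y: 195 } },
]);

// Serialize the whole log so it can be saved and replayed later.
console.log(JSON.stringify(poseLog));
```

Serializing the log with `JSON.stringify` is one simple way to store it, and dropping low-confidence keypoints keeps noisy detections out of the skeletal rendering.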

What it Does

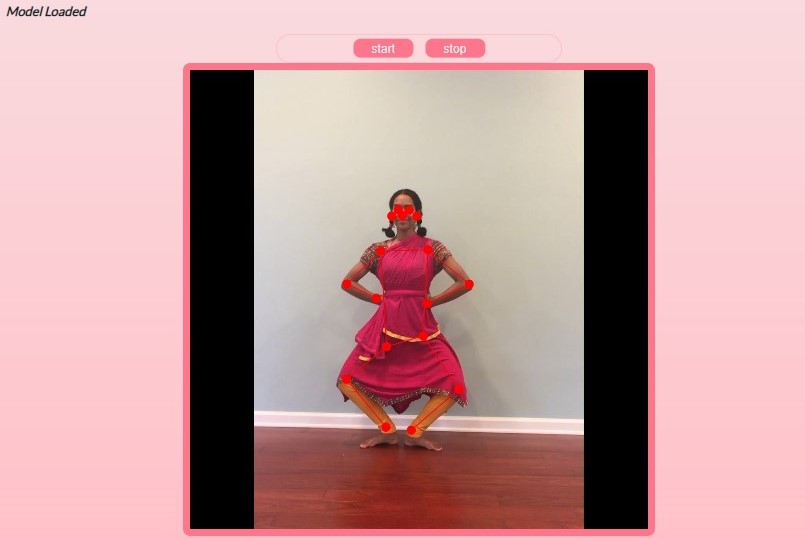

Natya*ML has two parts: music and dance. The music is entirely ML-generated, and the user can pick which sound they would like to hear (mridangam #1, mridangam #2, violin, or harp) and which key they would like the music to be in. For the dance portion, I’ve created a library of 8 steps. The user can loop any one of the steps to watch it over and over again, or auto-choreograph a dance, in which the steps are chosen to match the beats of the music. Whichever option the user picks, the canvas displays a recording of each step along with its skeletal rendering.
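One way the user’s choice of key could shape the music is by restricting pitches to that key’s scale, for example when building a seed melody for a model such as MusicRNN. The sketch below is hypothetical: the scale intervals are standard music theory, but `scalePitches`, `snapToScale`, and the choice of octave 4 are assumptions, not necessarily how Natya*ML handles the key.

```typescript
// Hypothetical helpers: the intervals are standard music theory, but how
// Natya*ML actually applies the user's key and mode is an assumption.
type ScaleMode = "major" | "minor";

const INTERVALS: Record<ScaleMode, number[]> = {
  major: [0, 2, 4, 5, 7, 9, 11], // whole-whole-half-whole-whole-whole-half
  minor: [0, 2, 3, 5, 7, 8, 10], // natural minor
};

const NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"];

/** MIDI pitches for one octave of the scale, starting at the tonic in octave 4. */
function scalePitches(key: string, mode: ScaleMode): number[] {
  const pitchClass = NOTE_NAMES.indexOf(key);
  if (pitchClass < 0) throw new Error(`Unknown key: ${key}`);
  const tonic = 60 + pitchClass; // MIDI 60 = C4
  return INTERVALS[mode].map((interval) => tonic + interval);
}

/** Move a pitch to the nearest in-scale pitch; ties resolve downward. */
function snapToScale(pitch: number, key: string, mode: ScaleMode): number {
  const inScale = scalePitches(key, mode).map((p) => p % 12);
  for (let d = 0; d < 12; d++) {
    if (inScale.includes((((pitch - d) % 12) + 12) % 12)) return pitch - d;
    if (inScale.includes((pitch + d) % 12)) return pitch + d;
  }
  return pitch;
}

console.log(scalePitches("D", "major").join(" ")); // 62 64 66 67 69 71 73
console.log(snapToScale(65, "D", "major"));        // 64 (F snaps down to E)
```

Because adjacent notes in major and natural minor scales are at most two semitones apart, any out-of-scale pitch sits exactly between two scale tones; this sketch resolves those ties downward.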

Technical Implementation

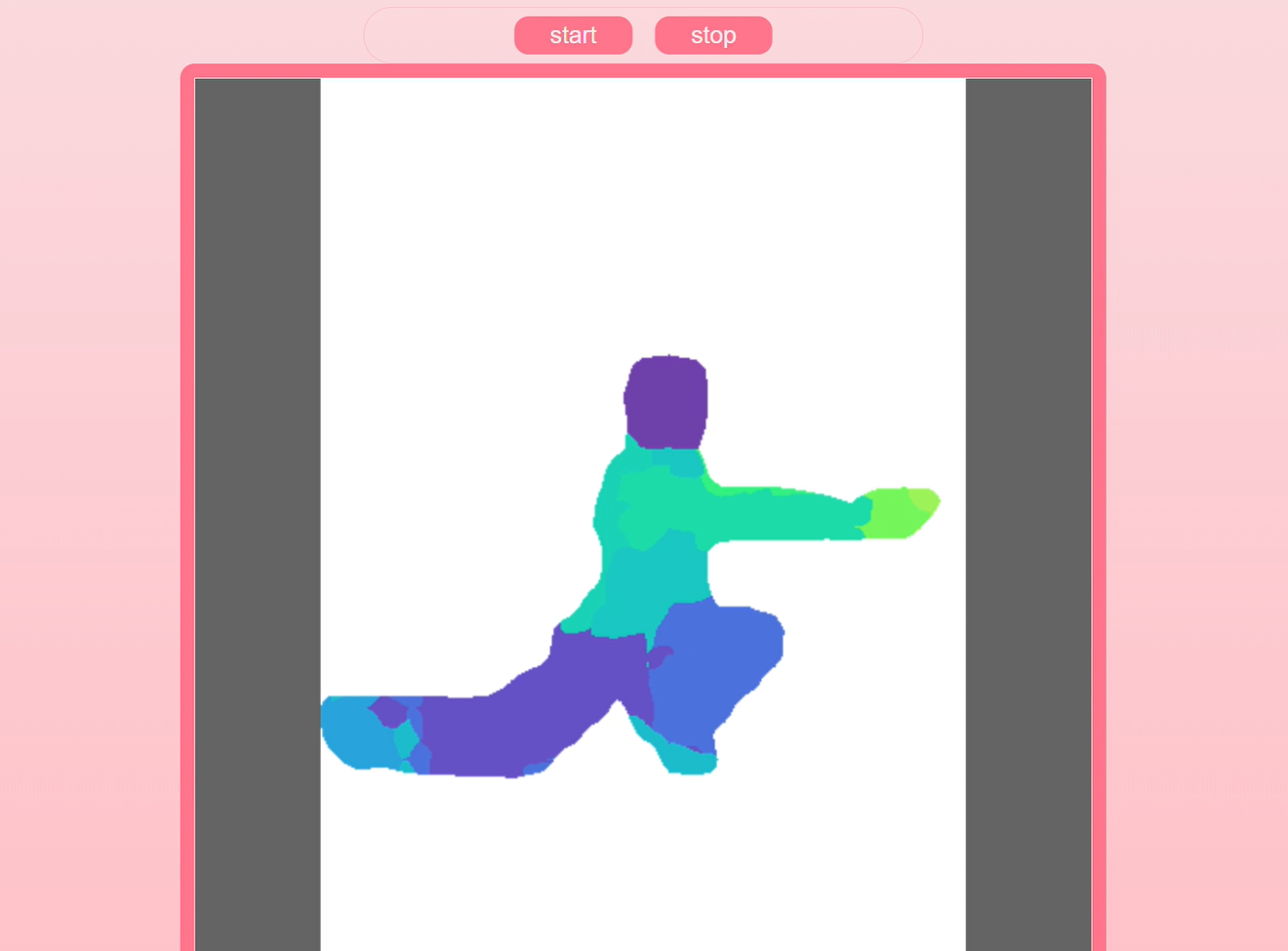

The music was built with Tone.js and MusicRNN from Magenta.js, and the user can change its key, mode, and instrument. The auto-choreograph function then maps the beats of the music to the dance steps. This function is not based on machine learning; instead, it uses mathematics to arrange the dance steps to the music. The skeletal rendering of the dancer was created with PoseNet from ml5.js, and I wrote code to save and process the PoseNet values for each frame. The silhouette was created with BodyPix from ml5.js. Unfortunately, running PoseNet and BodyPix together caused a lot of lag, so they currently do not work together.
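The auto-choreograph function is described as mathematical rather than ML-based. A minimal sketch of that idea, assuming each dance step lasts a whole number of beats, is to lay steps end to end so that every step starts on a beat. The step names, beat counts, and round-robin selection rule below are placeholders, not the project’s actual algorithm.

```typescript
// Hypothetical sketch: step names, beat counts, and the round-robin rule are
// placeholders, not Natya*ML's actual auto-choreograph algorithm.
interface DanceStep {
  name: string;
  beats: number; // beats taken by one repetition of the step
}

interface Placement {
  step: string;
  startBeat: number;
}

/** Fill `totalBeats` with steps laid end to end, each starting on a beat. */
function autoChoreograph(library: DanceStep[], totalBeats: number): Placement[] {
  const plan: Placement[] = [];
  let beat = 0;
  let next = 0; // round-robin position in the library
  while (beat < totalBeats) {
    const remaining = totalBeats - beat;
    let placed = false;
    // Try each step once, starting from `next`, until one fits what is left.
    for (let tries = 0; tries < library.length; tries++) {
      const step = library[(next + tries) % library.length];
      if (step.beats <= remaining) {
        plan.push({ step: step.name, startBeat: beat });
        beat += step.beats;
        next = (next + tries + 1) % library.length;
        placed = true;
        break;
      }
    }
    if (!placed) break; // no step is short enough for the leftover beats
  }
  return plan;
}

const library: DanceStep[] = [
  { name: "step A", beats: 8 },
  { name: "step B", beats: 4 },
  { name: "step C", beats: 8 },
];

// 32 beats = 8 measures of 4/4.
for (const p of autoChoreograph(library, 32)) {
  console.log(`beat ${p.startBeat}: ${p.step}`);
}
```

Multiplying a `startBeat` by the number of quantized steps per beat gives the grid position where the matching video clip should begin. A random or music-aware choice could replace the round-robin rule without changing the beat alignment.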

Challenges

I had only started learning JavaScript this summer, and I had no prior experience with Tone.js, Magenta.js, or ml5.js before this hackathon, so I ran into a lot of challenges. My first challenge was auto-generating the music. My next challenge was making the generated music vary based on the user’s input. After that, I faced challenges working with PoseNet: it took me a while to get the auto-choreograph function working and to collect the PoseNet data. I then started working with BodyPix from ml5.js. When I tried to run PoseNet and BodyPix together, I experienced a lot of lag. My machine couldn’t handle both of them running at once, and although I would have liked to go further with that combination, this problem held me back.

I am immensely proud of how much I accomplished. Considering that I started the hackathon with no knowledge of Tone.js, Magenta.js, or ml5.js, and very minimal experience with HTML and CSS, I’m so happy that I was able to learn this much and create Natya*ML. I’m also really glad that I was able to combine my 11 years of dancing and 8 years of learning piano with programming. I had never really considered how much art can come into play in programming.

Next Steps & Future Directions

I want to expand the video library of steps. There are about 175 steps in Bharatanatyam, but due to time and resource constraints, the library currently contains only 8 of them. With more resources, I would also like to integrate BodyPix and PoseNet to render the dancer on a cool background; improving the graphics is definitely something I’m interested in. Lastly, I would like to expand Natya*ML into a playable game where users can learn about Bharatanatyam and test their own skills.