Update (01/03/19): Try out the new magic-sketchpad game!

Update (08/02/18): sketch-rnn has been ported to TensorFlow.js under the Magenta.js project! Have a look at the new documentation and code.

Try the sketch-rnn demo.

For mobile users on a cellular data connection: The size of this first demo is around 5 MB of data. Every time you change the model in the demo, you will use another 5 MB of data.

We made an interactive web experiment that lets you draw together with a recurrent neural network model called sketch-rnn. We taught this neural net to draw by training it on millions of doodles collected from the Quick, Draw! game. Once you start drawing an object, sketch-rnn will come up with many possible ways to continue drawing this object based on where you left off. Try the first demo.

In the above demo, you are instructed to start drawing a particular object. Once you stop doodling, the neural network takes over and attempts to guess the rest of your doodle. You can take over drawing again and continue where you left off. We trained around 100 models you can choose to experiment with, and some models are trained on multiple categories.

Other sketch-rnn Demos

The demos below are best experienced on a desktop browser, rather than on a mobile device.

Multi-Prediction

Multiple Predict Demo

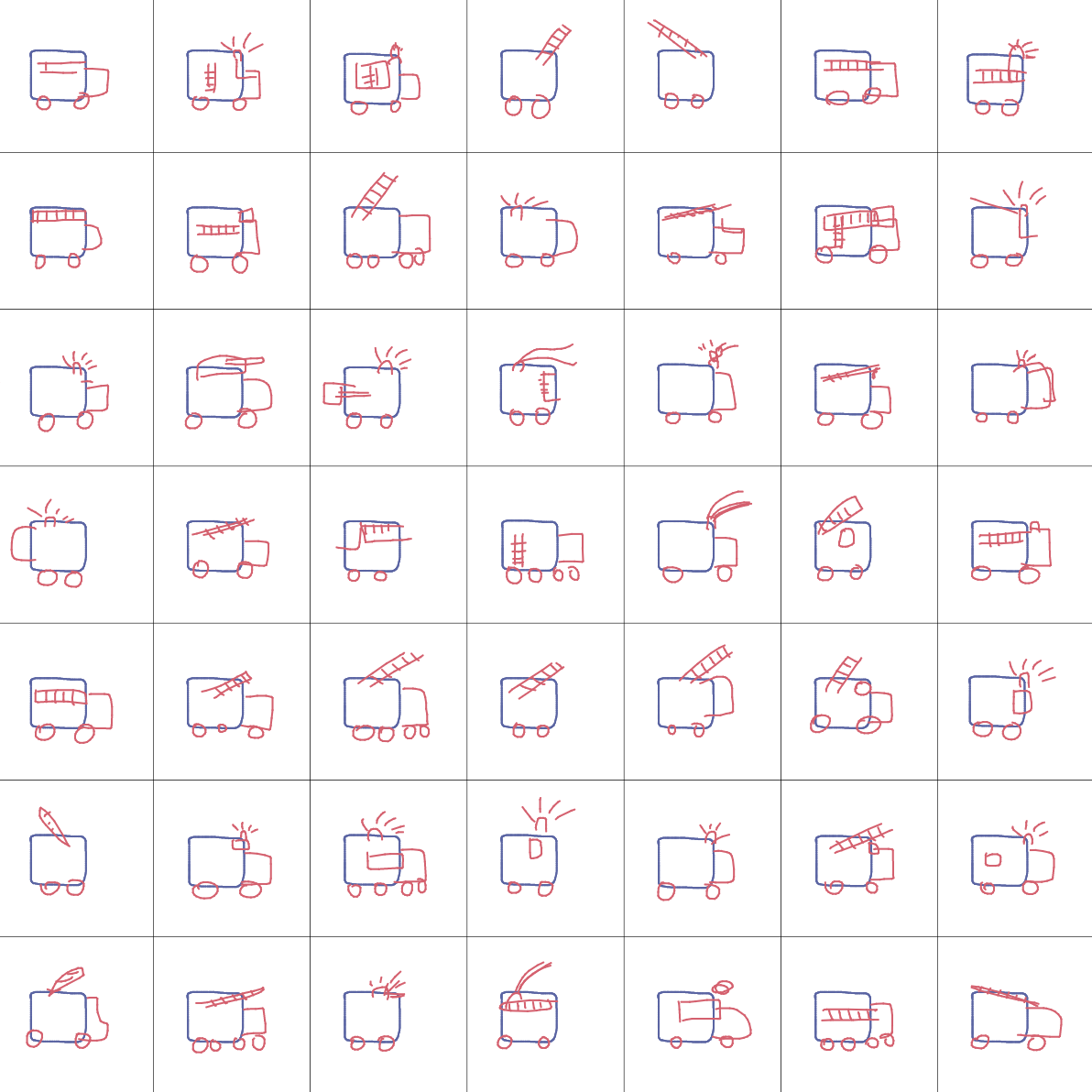

This demo is similar to the first demo, which predicts the rest of your drawing. In this version, you draw the beginning of a sketch inside the area on the left, and the model predicts the rest of the drawing inside the smaller boxes on the right. This way, you can see a variety of different endings predicted by the model. The predicted endings sometimes feel expected, sometimes unexpected and weird, and sometimes hideous and totally wrong.

You can also choose different categories to have the model draw different objects from the same incomplete starting sketch, producing things like square cats or circular trucks. You can always interrupt the model and continue working on your drawing inside the area on the left, and the model will then resume predicting from where you left off.

This is my firetruck. There are many like it, but this one is mine.

Since the model is trained on a dataset of how other people doodle, we also found it interesting to deliberately draw in a way that differs from the model’s predictions, both to help with our own mental search for novelty and to avoid conforming to the masses. Try the Multi Predict demo.

Interpolation

Interpolation Demo

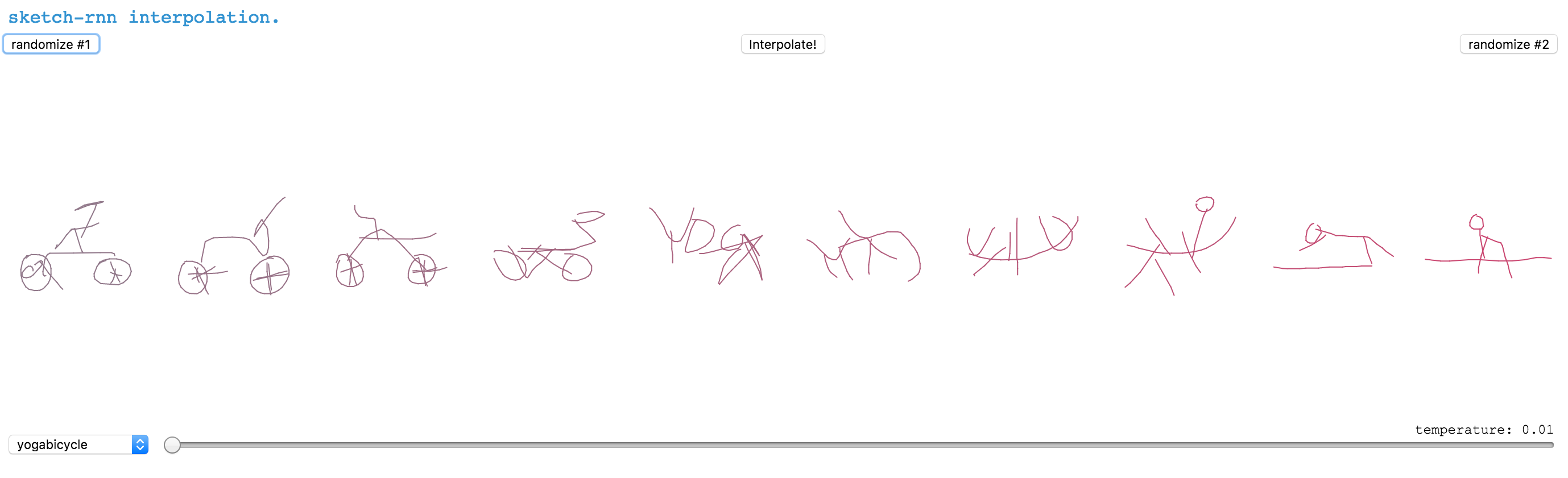

In addition to predicting the rest of an incomplete drawing, sketch-rnn is also able to morph from one drawing to another drawing. In the Interpolation Demo, you can get the model to randomly generate two images using the two randomize buttons on the sides of the screen. After hitting the Interpolate! button in the middle, the model will come up with new drawings that it believes to be the interpolation between the two original drawings. In the image above, the model interpolates between a bicycle and a yoga position. Try the Interpolation demo to morph between two randomly generated images.
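The post does not spell out how the morphing is computed. As a minimal sketch, assume each drawing is summarized by a latent vector and that the in-between drawings come from decoding vectors spherically interpolated between the two endpoints. The function names `slerp` and `interpolation_path`, and the toy 2-D vectors, are hypothetical and only for illustration; the demo's actual code may differ.

```python
import math


def slerp(z0, z1, t):
    """Spherically interpolate between vectors z0 and z1 at fraction t in [0, 1]."""
    dot = sum(a * b for a, b in zip(z0, z1))
    norm0 = math.sqrt(sum(a * a for a in z0))
    norm1 = math.sqrt(sum(b * b for b in z1))
    # Clamp to guard against rounding pushing the cosine outside [-1, 1].
    cos_omega = max(-1.0, min(1.0, dot / (norm0 * norm1)))
    omega = math.acos(cos_omega)
    sin_omega = math.sin(omega)
    if abs(sin_omega) < 1e-8:
        # Vectors are (anti)parallel: fall back to plain linear interpolation.
        return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]
    w0 = math.sin((1.0 - t) * omega) / sin_omega
    w1 = math.sin(t * omega) / sin_omega
    return [w0 * a + w1 * b for a, b in zip(z0, z1)]


def interpolation_path(z0, z1, steps):
    """Return `steps` vectors (steps >= 2) from z0 to z1, endpoints included."""
    return [slerp(z0, z1, i / (steps - 1)) for i in range(steps)]


# Toy stand-ins for the latent vectors of two drawings (assumed, not real model output).
z_bicycle = [1.0, 0.0]
z_yoga = [0.0, 1.0]
path = interpolation_path(z_bicycle, z_yoga, 5)
```

Under these assumptions, each vector in `path` would be handed to the model's decoder to draw one intermediate sketch, with the first and last vectors reproducing the two original drawings.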

Variational Auto-Encoder

Variational Autoencoder Demo

The model can also mimic your drawings and produce similar doodles. In the Variational Autoencoder Demo, you are asked to draw a complete sketch of a specified object. After you finish the sketch inside the area on the left, hit the auto-encode button and the model will start drawing similar sketches inside the smaller boxes on the right. Rather than producing a perfect duplicate of your drawing, the model tries to mimic it.

You can experiment with drawing objects outside the category you are supposed to draw, and see how the model interprets your drawing. For example, try to draw a cat, and have a model trained to draw crabs generate cat-like crabs. Try the Variational Autoencoder demo.

Want to learn more?

If you want to learn more about what is going on, here are a few pointers to explore:

- Google Research blog post about this model.
- Read our paper A Neural Representation of Sketch Drawings.
- Earlier Magenta blog post about the TensorFlow implementation of this model. GitHub repo.
- JavaScript implementation of this model along with pre-trained model weights. GitHub repo.