Sketch-RNN, a generative model for vector drawings, is now available in Magenta. For an overview of the model, see the April 2017 Google Research blog post, Teaching Machines to Draw (David Ha). For the technical machine learning details, see the arXiv paper A Neural Representation of Sketch Drawings (David Ha and Douglas Eck).

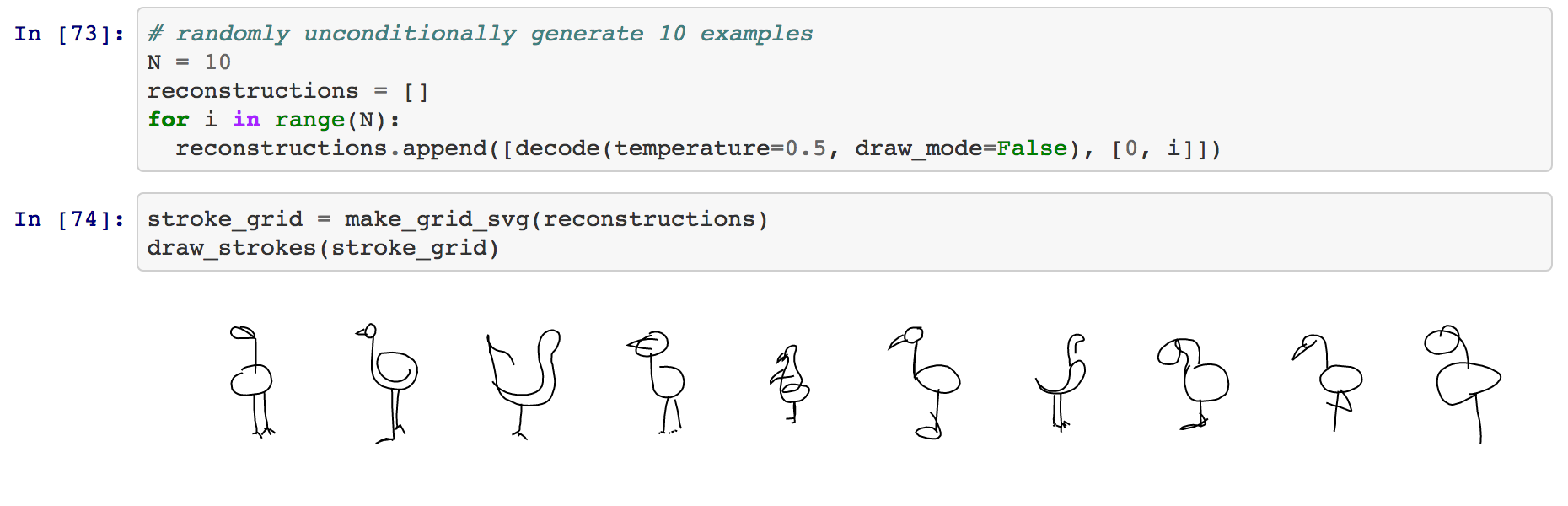

Vector drawings of flamingos from our Jupyter notebook.

To try out Sketch-RNN, visit the Magenta GitHub for instructions. We’ve provided trained models, code for you to train your own models in TensorFlow, and a Jupyter notebook tutorial (check it out!).

The code release is timed to coincide with a Google Creative Lab data release. Visit Quick, Draw! The Data for more information. For versions of the data pre-processed to work with Sketch-RNN, please refer to the GitHub repo.

We’ll leave you with a look at yoga poses generated by moving through the learned representation (latent space) of the model trained on yoga drawings. Notice how the model gets confused at around the 10-second mark, when it moves from standing poses towards poses done on a yoga mat. We discuss reasons for this behavior in our arXiv paper, A Neural Representation of Sketch Drawings.
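
For readers curious about what "moving through the latent space" can look like in practice, here is a minimal sketch of one common approach: spherical interpolation between two latent vectors. It assumes the latent vectors are plain lists of floats that some encoder has already produced. The names `slerp` and `latent_path` are illustrative, not part of the release described here, and this post does not say whether the yoga animation was produced with exactly this scheme. Decoding each intermediate vector back into a drawing is left out.

```python
import math


def slerp(z0, z1, t):
    """Spherically interpolate between latent vectors z0 and z1.

    t = 0 returns z0, t = 1 returns z1. Assumes both vectors are non-zero
    and have the same length.
    """
    dot = sum(a * b for a, b in zip(z0, z1))
    norm0 = math.sqrt(sum(a * a for a in z0))
    norm1 = math.sqrt(sum(b * b for b in z1))
    # Angle between the two vectors, clamped to guard against rounding error.
    cos_omega = max(-1.0, min(1.0, dot / (norm0 * norm1)))
    omega = math.acos(cos_omega)
    sin_omega = math.sin(omega)
    if abs(sin_omega) < 1e-8:
        # Vectors are (anti-)parallel: fall back to linear interpolation.
        return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]
    w0 = math.sin((1.0 - t) * omega) / sin_omega
    w1 = math.sin(t * omega) / sin_omega
    return [w0 * a + w1 * b for a, b in zip(z0, z1)]


def latent_path(z0, z1, steps=10):
    """Return `steps` evenly spaced latent vectors from z0 to z1 (steps >= 2)."""
    return [slerp(z0, z1, i / (steps - 1)) for i in range(steps)]
```

Each vector returned by `latent_path` would then be passed to the model's decoder to draw one frame. Abrupt changes between neighboring frames correspond to the kind of confusion visible in the yoga example.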