Colab Notebooks

Colaboratory is a Google research project created to help disseminate machine learning education and research. It's a Jupyter notebook environment that requires no setup to use and runs entirely in the cloud.

We provide notebooks for several of our models that allow you to interact with them on a hosted Google Cloud instance for free.

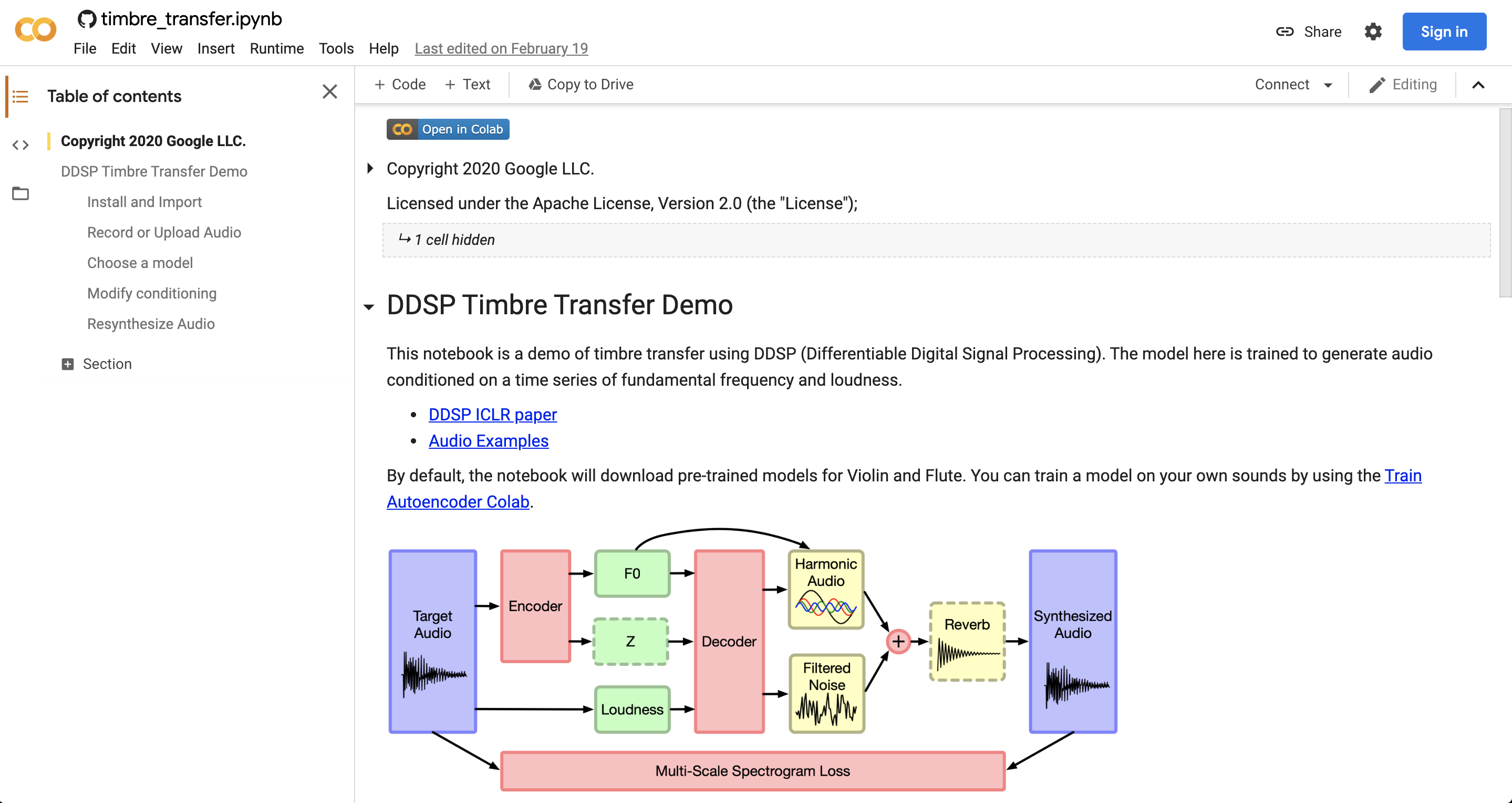

DDSP (Differentiable Digital Signal Processing) enables new interactions with raw audio. This notebook converts audio recorded from your microphone into the sound of an instrument such as a violin or a flute.
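DDSP pairs neural networks with classic signal-processing components. One such component is a harmonic (additive) synthesizer, which sums sinusoids at integer multiples of a fundamental frequency f0. The sketch below is a plain, non-differentiable illustration under simplifying assumptions (a constant f0 and fixed harmonic amplitudes). The function `harmonic_synth` and its parameters are hypothetical and are not the `ddsp` library's API.

```python
import math
from typing import List


def harmonic_synth(f0_hz: float, harmonic_amps: List[float],
                   sample_rate: int = 16000, num_samples: int = 16000) -> List[float]:
    """Hypothetical helper: sum of sinusoids at k * f0 for k = 1, 2, ...

    Simplified sketch: f0 and the harmonic amplitudes are constant over time.
    """
    nyquist = sample_rate / 2
    samples = []
    for n in range(num_samples):
        t = n / sample_rate  # time of sample n in seconds
        value = 0.0
        for k, amp in enumerate(harmonic_amps, start=1):
            freq = k * f0_hz
            if freq >= nyquist:  # skip harmonics that would alias
                continue
            value += amp * math.sin(2 * math.pi * freq * t)
        samples.append(value)
    return samples


# One second of a 440 Hz tone whose k-th harmonic has amplitude 1/k (sawtooth-like).
tone = harmonic_synth(440.0, [1.0 / k for k in range(1, 11)])
```

In a trained model, f0 and the harmonic amplitudes would vary over time and be driven by a network; this sketch only shows the synthesis step.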

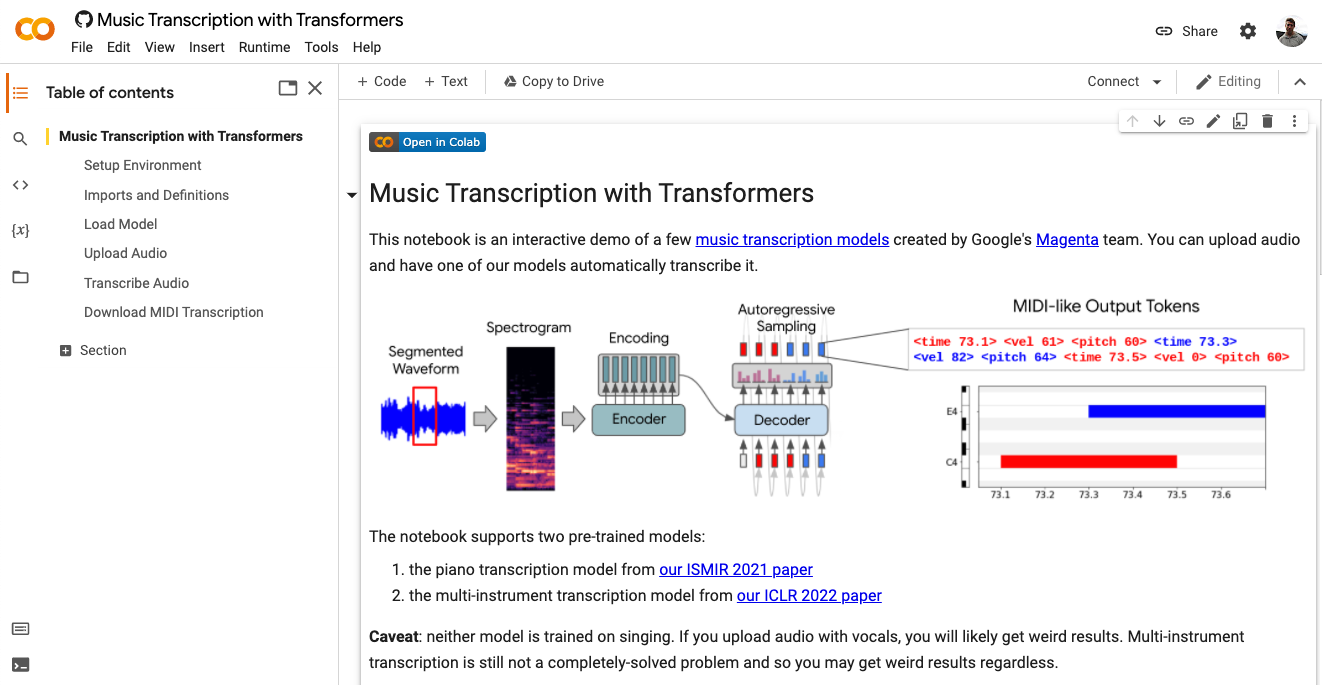

This Colab notebook allows you to upload your own audio and transcribe it with one of a few different Transformer-based models, including the MT3 model for multi-instrument audio.

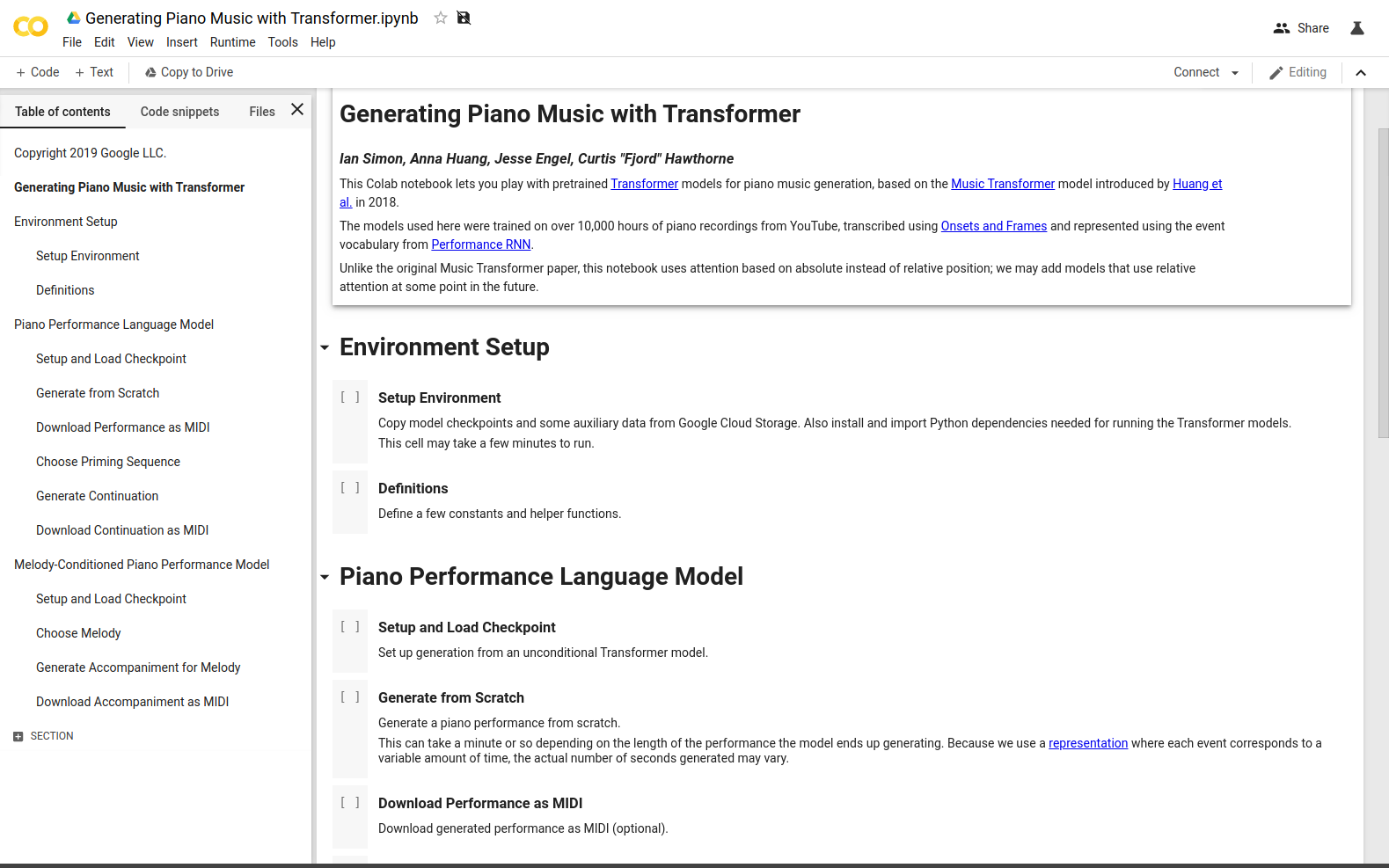

Play with Music Transformer and control the model in a few different ways, or just generate new performances from scratch.

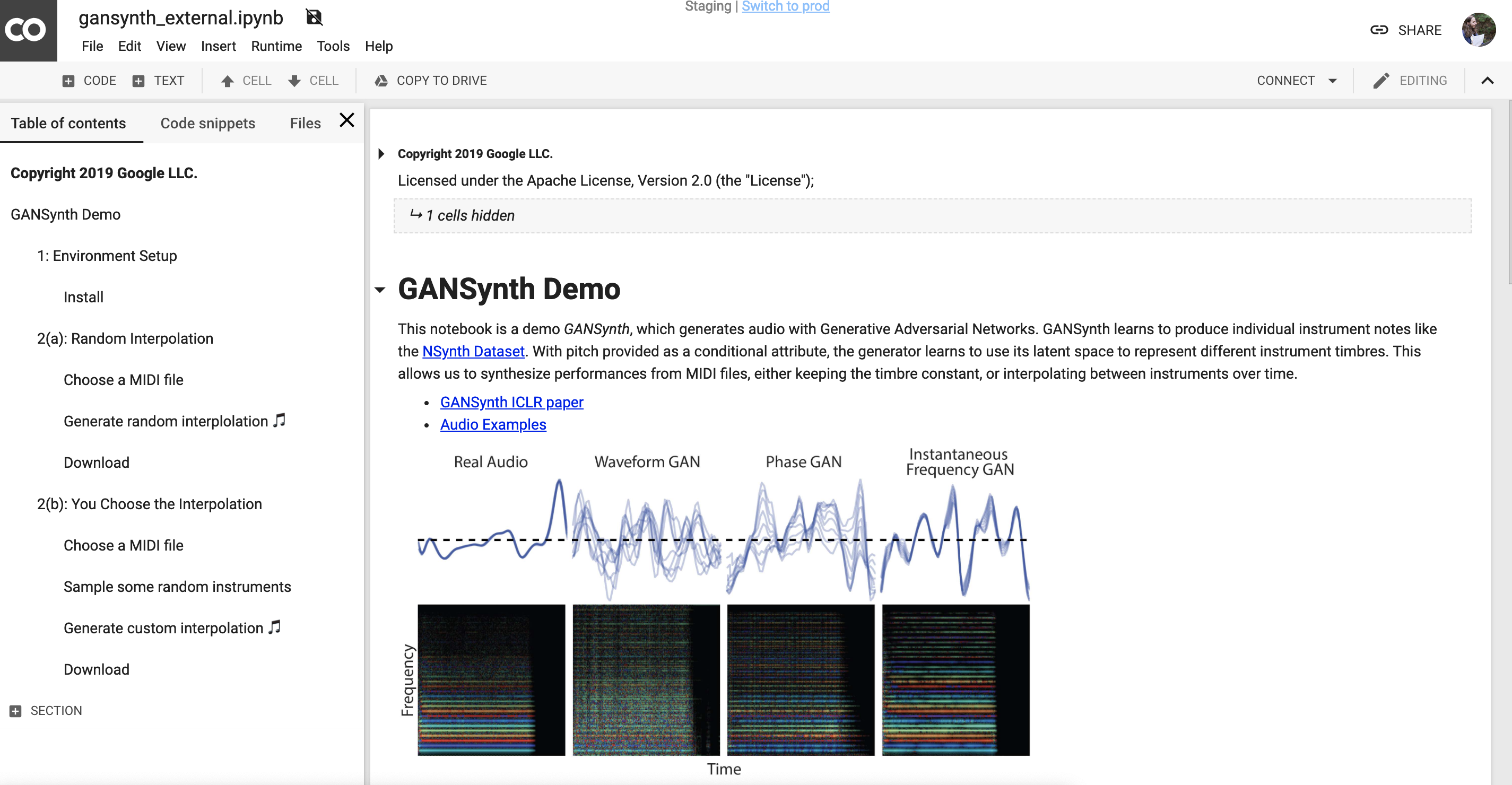

GANSynth generates high-fidelity audio with GANs. Here we generate audio from MIDI while interpolating in timbre space.
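Generating audio from MIDI requires mapping MIDI note numbers to pitches. Under the standard convention (twelve-tone equal temperament, with note 69 = A4 = 440 Hz), note `m` has frequency `440 * 2**((m - 69) / 12)` Hz. The helper below is a generic, hypothetical illustration of that formula, not code from the GANSynth notebook.

```python
def midi_to_hz(note: float) -> float:
    """Convert a MIDI note number to Hz (equal temperament, A4 = note 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)


middle_c = midi_to_hz(60)  # about 261.63 Hz
a5 = midi_to_hz(81)        # one octave above A4, so exactly twice 440 Hz
```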

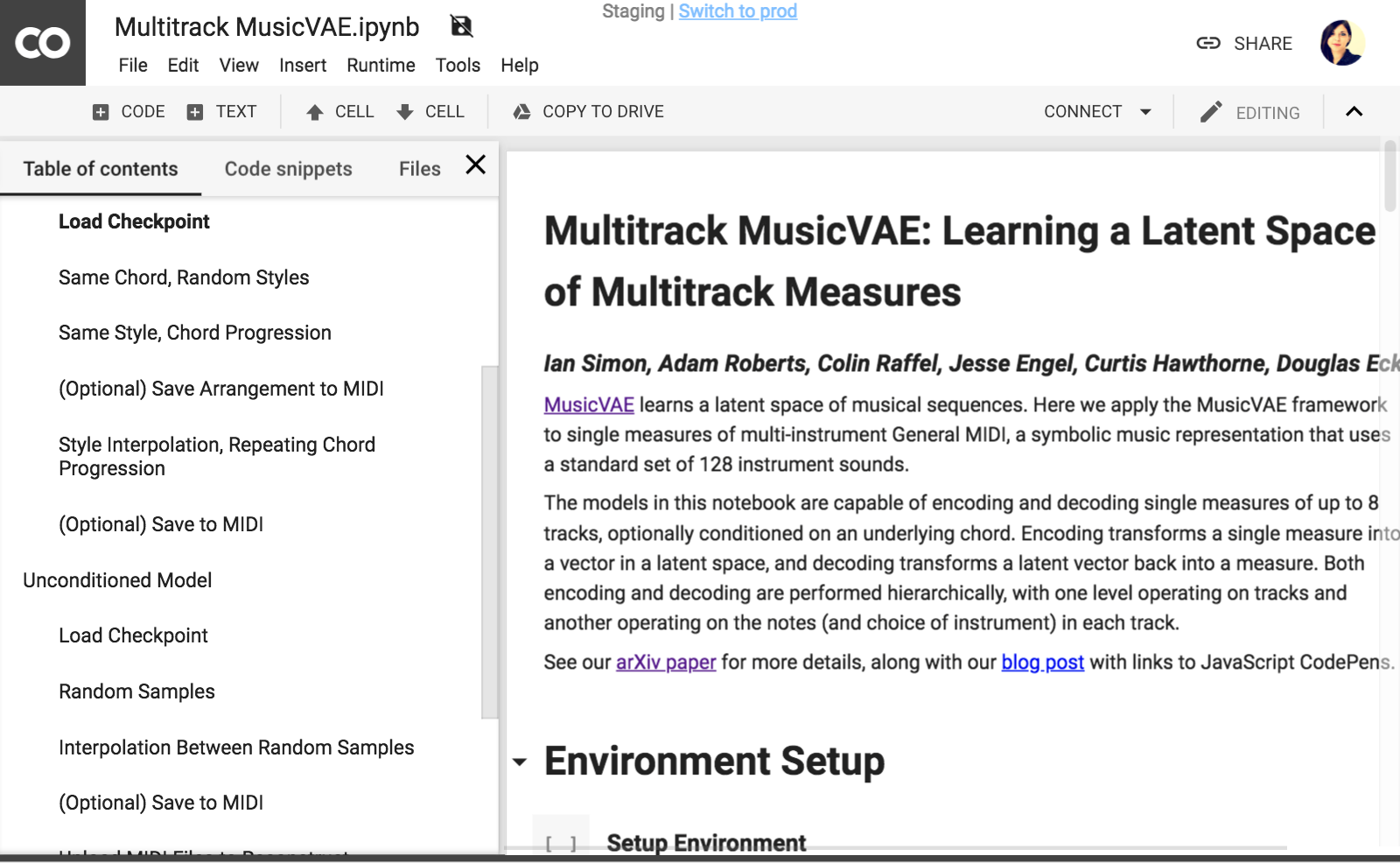

MusicVAE learns a latent space of musical sequences. Here we apply the MusicVAE framework to single measures of multi-instrument General MIDI, a symbolic music representation that uses a standard set of 128 instrument sounds.
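General MIDI groups its 128 instrument programs into 16 families of 8 consecutive programs each. The GM specification numbers programs 1 to 128, while many software libraries use 0-based numbers 0 to 127; the sketch below assumes 0-based numbering, and the names `GM_FAMILIES` and `gm_family` are hypothetical. Percussion in General MIDI is handled separately, on channel 10, rather than as one of these programs.

```python
# The 16 General MIDI instrument families, 8 programs each (128 programs total).
GM_FAMILIES = [
    "Piano", "Chromatic Percussion", "Organ", "Guitar",
    "Bass", "Strings", "Ensemble", "Brass",
    "Reed", "Pipe", "Synth Lead", "Synth Pad",
    "Synth Effects", "Ethnic", "Percussive", "Sound Effects",
]


def gm_family(program: int) -> str:
    """Return the GM family name for a 0-based program number (0-127)."""
    if not 0 <= program <= 127:
        raise ValueError(f"program must be in 0..127, got {program}")
    return GM_FAMILIES[program // 8]


print(gm_family(40))  # Violin (GM program 41, 0-based 40) -> "Strings"
```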

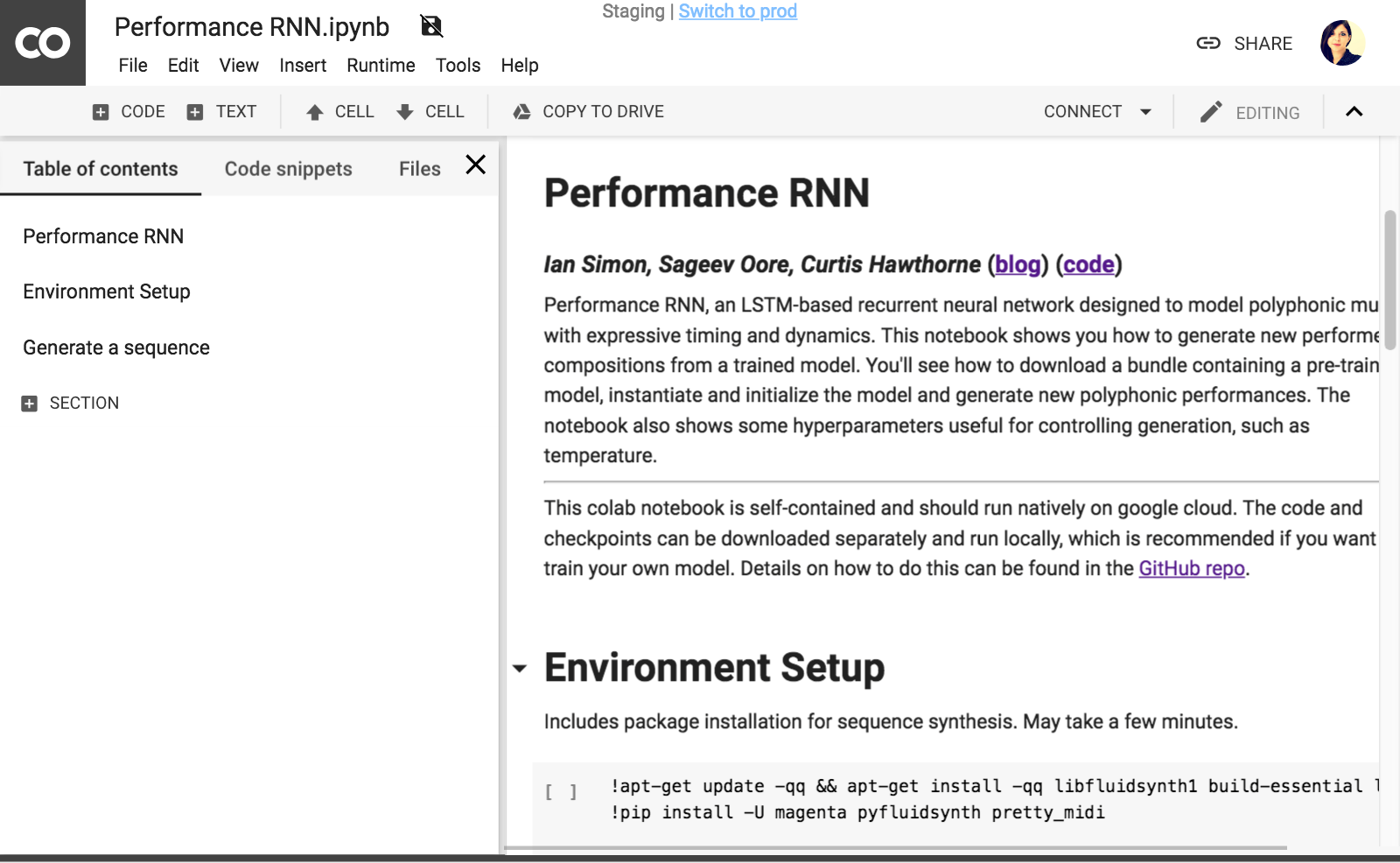

Performance RNN is an LSTM-based recurrent neural network designed to model polyphonic music with expressive timing and dynamics. This notebook shows you how to generate new performed compositions from a trained model.
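Modeling expressive timing and dynamics means the input cannot be a fixed rhythmic grid. This sketch assumes an event vocabulary like the one described for Performance RNN: note-on and note-off events for each of 128 pitches, time-shift events in 10 ms steps of up to 1 second, and 32 velocity bins. The helper names, the velocity binning formula, and the rule that note-offs precede note-ons at equal times are hypothetical choices for illustration, not the Magenta implementation.

```python
from typing import List, Tuple

# Assumed vocabulary parameters for this sketch.
STEPS_PER_SECOND = 100   # time shifts in 10 ms increments
MAX_SHIFT_STEPS = 100    # a single TIME_SHIFT covers at most 1 second
NUM_VELOCITY_BINS = 32   # MIDI velocities 1..127 quantized into 32 bins

Note = Tuple[int, float, float, int]  # (pitch, start_sec, end_sec, velocity)


def velocity_bin(velocity: int) -> int:
    """Map a MIDI velocity in 1..127 to a bin in 1..32."""
    return 1 + (velocity - 1) * NUM_VELOCITY_BINS // 127


def encode_performance(notes: List[Note]) -> List[Tuple[str, int]]:
    """Turn notes into a flat list of (event_type, value) events."""
    # Each note contributes a note-on boundary (1) and a note-off boundary (0).
    boundaries = []
    for pitch, start, end, velocity in notes:
        boundaries.append((round(start * STEPS_PER_SECOND), 1, pitch, velocity))
        boundaries.append((round(end * STEPS_PER_SECOND), 0, pitch, velocity))
    # Sort by time; at equal times, note-offs (0) sort before note-ons (1).
    boundaries.sort()

    events = []
    current_step = 0
    current_bin = None
    for step, is_on, pitch, velocity in boundaries:
        # Advance the clock with TIME_SHIFT events, at most 1 second each.
        gap = step - current_step
        while gap > 0:
            shift = min(gap, MAX_SHIFT_STEPS)
            events.append(("TIME_SHIFT", shift))
            gap -= shift
        current_step = step
        if is_on:
            vbin = velocity_bin(velocity)
            if vbin != current_bin:  # emit VELOCITY only when it changes
                events.append(("VELOCITY", vbin))
                current_bin = vbin
            events.append(("NOTE_ON", pitch))
        else:
            events.append(("NOTE_OFF", pitch))
    return events


# Middle C for half a second, then E held for 1.5 seconds.
events = encode_performance([(60, 0.0, 0.5, 80), (64, 0.5, 2.0, 80)])
```

Because timing is expressed as explicit shifts rather than grid positions, small deviations in onset time survive encoding, which is what an expressive-performance model needs.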

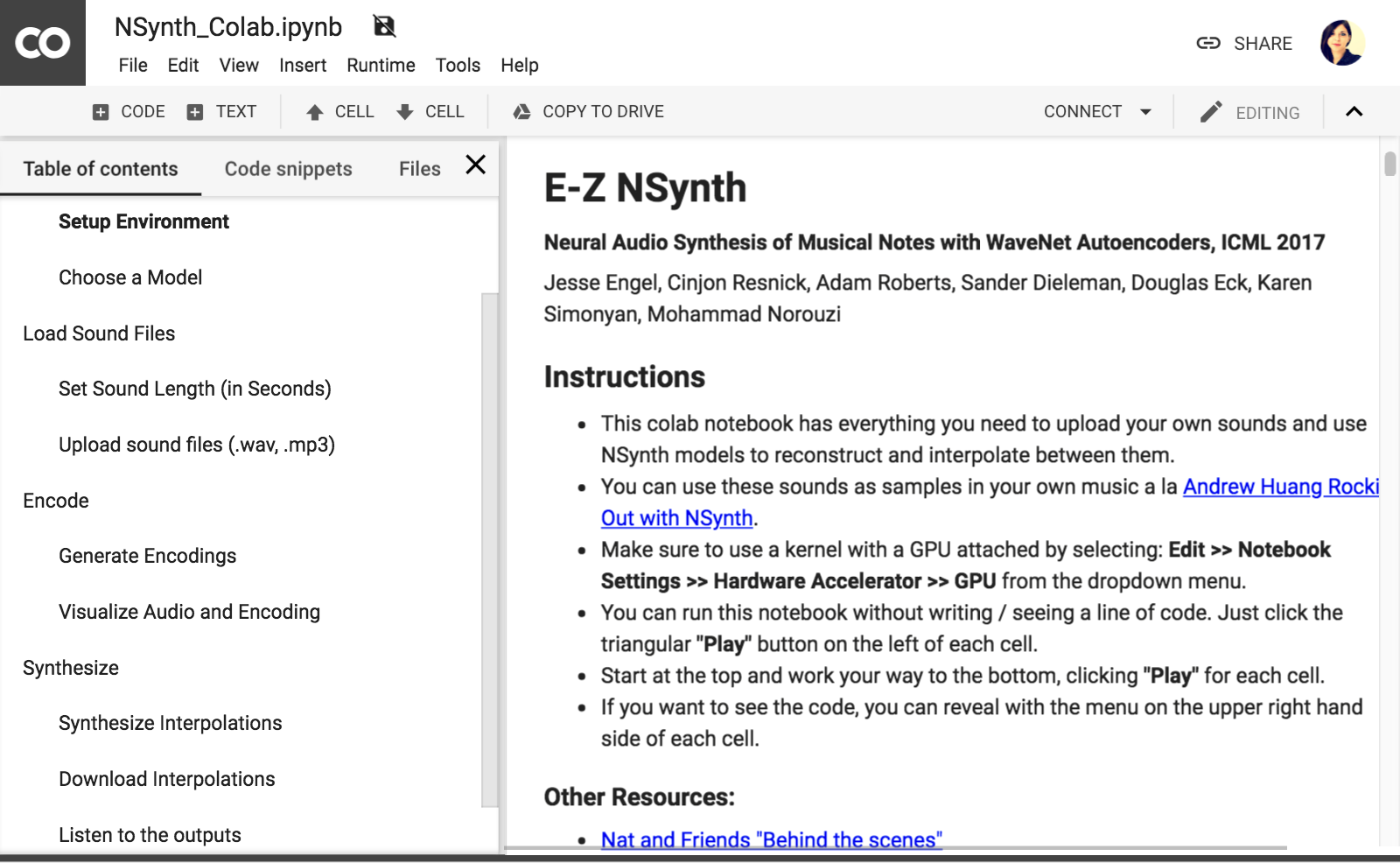

This Colab notebook has everything you need to upload your own sounds and use NSynth models to reconstruct and interpolate between them on a GPU at no cost.

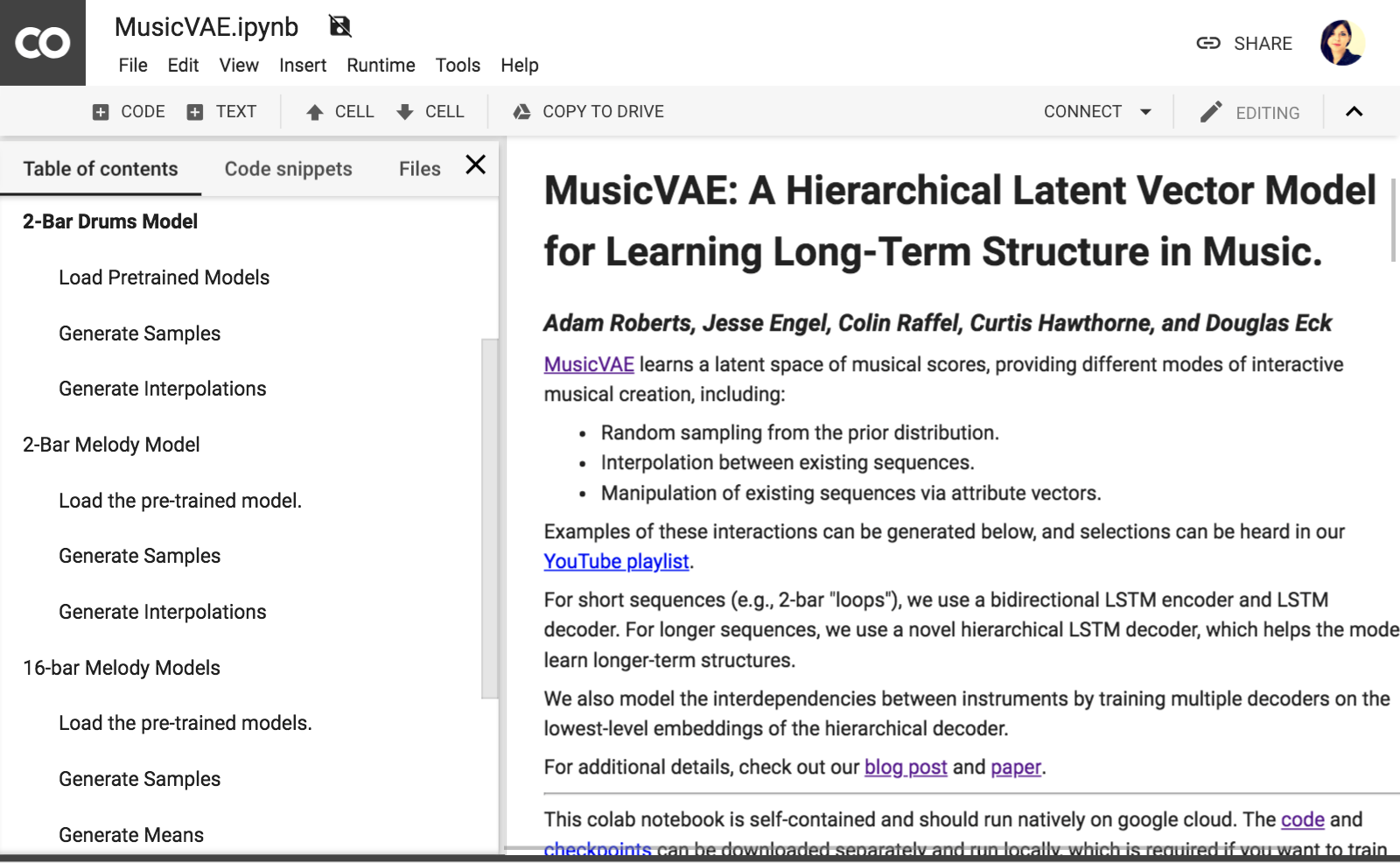

MusicVAE learns a latent space of musical scores. This Colab notebook lets you randomly sample from the prior distribution and interpolate between existing sequences using several pre-trained MusicVAE models.
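Both sampling and interpolation happen in the latent space. A minimal sketch, assuming a standard-normal prior N(0, I) over latent vectors (a common VAE choice): draw random vectors, then move between two of them with spherical linear interpolation (slerp). Slerp is often preferred over straight-line interpolation for Gaussian latents because, in high dimensions, random samples concentrate near a sphere, while points midway along a straight line between two samples have noticeably smaller norms than typical samples. The function names and the 512-dimensional latent size are assumptions for illustration; in the notebook, each latent point would be decoded back into a musical sequence by a trained model.

```python
import math
import random
from typing import List


def sample_prior(dim: int, rng: random.Random) -> List[float]:
    """Draw z ~ N(0, I), the standard-normal prior assumed in this sketch."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def slerp(p0: List[float], p1: List[float], t: float) -> List[float]:
    """Spherical linear interpolation between latent vectors p0 (t=0) and p1 (t=1)."""
    norm0 = math.sqrt(sum(x * x for x in p0))
    norm1 = math.sqrt(sum(x * x for x in p1))
    cos_omega = sum(a * b for a, b in zip(p0, p1)) / (norm0 * norm1)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))  # clamp for rounding error
    if math.isclose(math.sin(omega), 0.0, abs_tol=1e-9):
        # (Anti-)parallel vectors: slerp is ill-defined, so fall back to a straight line.
        return [(1 - t) * a + t * b for a, b in zip(p0, p1)]
    w0 = math.sin((1 - t) * omega) / math.sin(omega)
    w1 = math.sin(t * omega) / math.sin(omega)
    return [w0 * a + w1 * b for a, b in zip(p0, p1)]


rng = random.Random(0)
z_a, z_b = sample_prior(512, rng), sample_prior(512, rng)
path = [slerp(z_a, z_b, i / 10) for i in range(11)]  # 11 latent points from z_a to z_b
```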

Onsets and Frames is an automatic piano music transcription model. This Colab notebook demonstrates running the model on user-supplied recordings.
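The model's name refers to two kinds of per-frame predictions: note onsets and active (sounding) frames. A simplified sketch of how the two can be combined into notes, assuming already-thresholded boolean predictions: a note may start only where the onset detector fires, and it continues while the frame detector (or a continuing onset) stays active. This is an illustration, not the Magenta implementation; `decode_notes` is hypothetical, and details such as thresholds, mapping pitch indices to piano keys (MIDI 21 to 108), and velocity estimation are omitted.

```python
from typing import List, Optional, Tuple


def decode_notes(onsets: List[List[bool]],
                 frames: List[List[bool]]) -> List[Tuple[int, int, int]]:
    """Turn onset/frame predictions into (pitch_index, start_frame, end_frame) notes.

    onsets[t][p] and frames[t][p] are boolean predictions for frame t and pitch p.
    end_frame is exclusive.
    """
    num_frames = len(frames)
    num_pitches = len(frames[0]) if frames else 0
    notes = []
    for p in range(num_pitches):
        start: Optional[int] = None
        for t in range(num_frames):
            if start is None:
                # Frame activity without an onset is ignored.
                if onsets[t][p]:
                    start = t
            elif onsets[t][p] and not onsets[t - 1][p]:
                # A fresh onset while the note sounds: end it and start a new one.
                notes.append((p, start, t))
                start = t
            elif not (frames[t][p] or onsets[t][p]):
                notes.append((p, start, t))
                start = None
        if start is not None:  # note still sounding at the end of the input
            notes.append((p, start, num_frames))
    return notes
```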

This notebook provides code for running experiments related to the paper "Latent Constraints: Conditional Generation from Unconditional Generative Models."