In this post we introduce the GrooVAE class of models (pronounced “groovay”) for generating and controlling expressive drum performances, along with a new dataset to train the model. We can use GrooVAE to add character to stiff electronic drum beats, to come up with drums that match your sense of groove on another instrument (or just tapping on a table), and to explore different ways of playing the same drum pattern.

Here is a GrooVAE generating drums to match the groove of a bassline with the Drumify plugin of Magenta Studio:

You can learn all about the project in our ICML paper, download the dataset and the code for python and magenta.js, and try out GrooVAE in the Colab notebook and the Groove and Drumify plugins in Magenta Studio:

| 🥁 Web App | Colab Notebook | Ableton Live Plugin | 🔊 Additional Examples |
| 📝 ICML 2019 Paper | 💾 Groove MIDI Dataset | 🐍 Python Code | 🕸 magenta.js Code |

Making Beats with Machine Learning

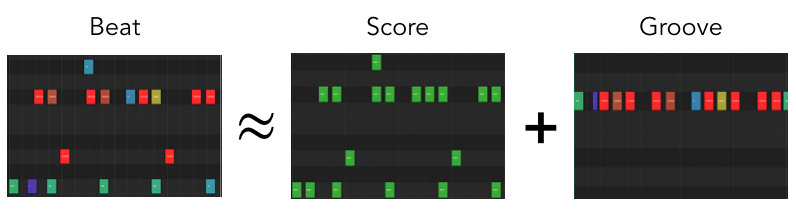

One (simplified) way to think about a beat, whether played live or electronically sequenced, is to break it down into two main components:

- the score - which drums are played, as could be written in western music notation, and

- the groove - how the drums are played, via precise dynamics (how hard each drum is struck) and microtiming (stylistic deviation from the exact notated time on a grid).
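As a rough illustration of this split, the sketch below separates a short performance into a score and a groove. It assumes a 16th-note grid, timing measured in beats, and a hypothetical `Hit` record; none of these names come from the GrooVAE code.

```python
import math
from dataclasses import dataclass

STEPS_PER_BEAT = 4  # assumption: quantize to a 16th-note grid


@dataclass
class Hit:
    drum: str       # hypothetical label, e.g. "kick", "snare", "hihat"
    beat: float     # performed onset time, measured in beats
    velocity: int   # MIDI velocity (1-127): how hard the drum was struck


def split_score_and_groove(hits):
    """Split performed hits into a quantized score and a groove.

    score:  set of (step, drum) pairs, i.e. which drums are played where
    groove: dict mapping (step, drum) -> (velocity, offset in steps)
    """
    score = set()
    groove = {}
    for hit in hits:
        position = hit.beat * STEPS_PER_BEAT
        step = math.floor(position + 0.5)   # nearest grid step
        offset = position - step            # microtiming, in [-0.5, 0.5)
        score.add((step, hit.drum))
        # If two hits of one drum share a step, the later one wins.
        groove[(step, hit.drum)] = (hit.velocity, offset)
    return score, groove


performance = [
    Hit("kick", 0.02, 110),   # slightly late
    Hit("hihat", 0.51, 60),   # slightly late, played softly
    Hit("snare", 0.98, 120),  # slightly early
]
score, groove = split_score_and_groove(performance)
```

Here `score` keeps only the grid positions, while `groove` holds the dynamics and the small deviations from the grid that were discarded when quantizing.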

Given either a score (also called a pattern) or, alternatively, a groove, a good drummer can come up with ways to fill in the other component. From a music production standpoint, however, while it is easy to create the score component in a MIDI editor or the groove component by tapping out or implying a rhythm on any instrument, creating both together is much more difficult, unless you have the technical skills of a drummer (and a readily available drum set for recording).

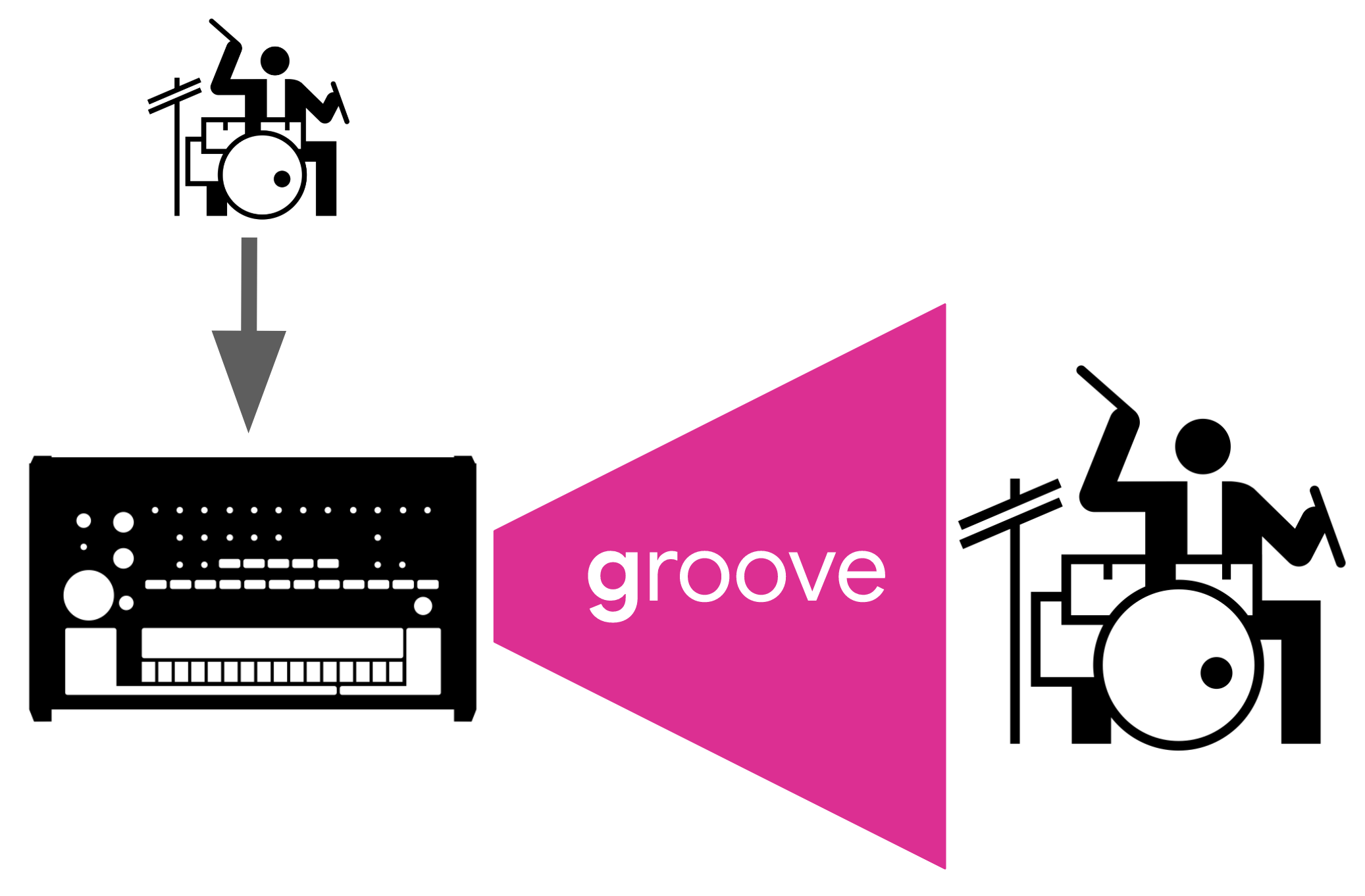

With GrooVAE, we try to address this production challenge by training machine learning models that take in either a drum score or a groove, but not both, and then generate a fully realized beat by adding the missing part.

Groove

The Groove model is a GrooVAE that adds groove to a score (similar to “humanization” functions common in Digital Audio Workstations):

| Score | With Generated Groove |
|---|---|

You can add grooves to your own beats (or ones generated by MusicVAE) with this app by Monica Dinculescu using the [magenta.js implementation](http://goo.gl/magenta/musicvae-js).

Drumify

The Drumify model is a GrooVAE that generates a score and velocities to add to any rhythmic groove:

| Tapped Rhythm | Generated Beat |
|---|---|

The Drumify model can work with any rhythmic input, as long as the tempo is consistent, by looking at the onset timing of any musical instrument. The video below shows this model responding to the timing of a keyboard player, a guitar player, and a bass player with different beats.
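To make the idea of "looking at onset timing" concrete, here is a minimal sketch of how note onsets from any instrument might be reduced to a single tapped rhythm. The function name, the 16th-note grid, and the rule of keeping the earliest onset per step are assumptions for illustration, not the actual Drumify preprocessing.

```python
import math

STEPS_PER_BEAT = 4  # assumption: 16th-note grid at a fixed, known tempo


def onsets_to_taps(onset_seconds, tempo_bpm):
    """Collapse note onsets into one tap track, ignoring pitch.

    Returns a dict mapping grid step -> timing offset (in steps).
    If several onsets fall on the same step, the earliest is kept
    (an arbitrary choice made for this sketch).
    """
    step_seconds = 60.0 / tempo_bpm / STEPS_PER_BEAT
    taps = {}
    for t in sorted(onset_seconds):
        position = t / step_seconds
        step = math.floor(position + 0.5)      # nearest grid step
        taps.setdefault(step, position - step)  # offset in [-0.5, 0.5)
    return taps


# A bassline at 120 BPM: each 16th-note step lasts 0.125 seconds.
bass_onsets = [0.0, 0.26, 0.27, 0.49]
taps = onsets_to_taps(bass_onsets, tempo_bpm=120)
```

Because only onset times are used, the same function applies equally to a keyboard, guitar, or bass part, which is why a consistent tempo is the main requirement.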

How does it work?

Model

The GrooVAE models are variants of the Recurrent Variational Autoencoder (VAE) architecture in the MusicVAE framework, with a few key differences:

- In addition to the MIDI patterns that MusicVAE accepts, we jointly encode the groove of a sequence into the same latent vector by training models to reconstruct not just quantized MIDI sequences, but also the velocity and microtiming of each note.

- We mask part of the input (either the score or the groove) from the encoder, so the GrooVAE models learn to predict the actual beat in the training data given only one part of it. Because the inputs and outputs differ, our models technically fall under the category of Variational Information Bottleneck (VIB) rather than VAE. We found empirically that the VIB component of the model, which constrains the encoded vectors to lie in a smoother space, helps keep the generated beats realistic, even when the input drum patterns are very different from those in the training data. We can see this at work in some of the examples throughout this post, when GrooVAE decides to change things up, for example by adding a few extra snare hits at the end, rather than sticking exactly to the prescribed input.

- We train these models on a new dataset of recordings by professional drummers playing an electronic drum kit that captures MIDI. These drummers recorded beats along with a metronome, so we can model the timing of their playing relative to a defined grid. This also allows us to mask out the groove from the model by quantizing the beats.
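The masking described above can be sketched as a pair of functions that turn a full beat into an encoder input, with the unmasked beat as the training target. The `(hit, velocity, offset)` tuple layout, the three-drum kit, and the rule of taking the loudest drum's timing for a tap are all assumptions made for this example rather than details of the released code.

```python
DRUMS = ["kick", "snare", "hihat"]  # assumption: reduced kit for illustration
REST = (0, 0.0, 0.0)                # no hit, no velocity, no offset


def empty_beat(num_steps):
    # beat[step][drum_index] = (hit, velocity in [0, 1], offset in [-0.5, 0.5))
    return [[REST for _ in DRUMS] for _ in range(num_steps)]


def mask_groove(beat):
    """Groove-style input: keep which drums hit, hide velocity and timing."""
    return [[(hit, 0.0, 0.0) for hit, _, _ in step] for step in beat]


def mask_score(beat):
    """Drumify-style input: one tap per step that keeps timing only,
    hiding which drums were played and how hard."""
    taps = []
    for step in beat:
        played = [cell for cell in step if cell[0] == 1]
        if played:
            # Assumption: use the timing of the loudest drum on this step.
            _, _, offset = max(played, key=lambda cell: cell[1])
            taps.append((1, 0.0, offset))
        else:
            taps.append(REST)
    return taps


def make_training_pair(beat, task):
    """Return (encoder_input, target); the target is always the full beat."""
    masked = mask_groove(beat) if task == "groove" else mask_score(beat)
    return masked, beat


beat = empty_beat(4)
beat[0][0] = (1, 0.9, 0.05)   # kick, loud, slightly late
beat[2][1] = (1, 0.7, -0.1)   # snare, slightly early
beat[2][2] = (1, 0.4, 0.02)   # hi-hat on the same step as the snare

groove_input, groove_target = make_training_pair(beat, "groove")
drumify_input, drumify_target = make_training_pair(beat, "drumify")
```

Quantizing a metronome-aligned recording is what makes `mask_groove` possible: once each hit is assigned to a grid step, dropping the velocity and offset leaves exactly the score.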

The Groove MIDI Dataset

We hired several professional drummers to come to the Google office, and we recorded them playing on a Roland TD-11 electronic drum set in our studio, collecting a total of 13.6 hours of drum recordings. Although there are too many styles out there to try to cover with just a few drummers, we found players with a few different backgrounds including training in jazz, Brazilian music, rock, and orchestral percussion. As part of the project, Magenta is releasing the Groove MIDI Dataset, full details and discussion of which can be found on the dataset page and in the paper.

Other Applications

Drum Infilling

There are many other ways to break down drumming besides the division between score and groove, and with our setup and model architecture in place, it’s easy to mask any component of a beat and experiment with training models to fill in the missing parts. One natural way to break down a beat is to separate out the individual instruments within a drum kit. We experimented with Drum Infilling, in which GrooVAE learns to add or replace the part for one piece of the drum kit, conditioning on the remaining parts.
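Masking by instrument can be sketched in the same spirit. The example below blanks out one drum's part so that a model could be trained to fill it back in from the remaining parts; the beat layout and names are hypothetical and chosen only for illustration.

```python
DRUMS = ["kick", "snare", "hihat"]  # assumption: reduced kit for illustration
REST = (0, 0.0, 0.0)                # (hit, velocity, offset) for a silent cell


def mask_instrument(beat, drum):
    """Infilling input: remove one drum's part, keep the others as played."""
    drum_index = DRUMS.index(drum)
    return [
        [REST if i == drum_index else cell for i, cell in enumerate(step)]
        for step in beat
    ]


# Two steps of a beat: kick + hi-hat, then snare + hi-hat.
beat = [
    [(1, 0.9, 0.0), REST, (1, 0.5, 0.03)],
    [REST, (1, 0.8, -0.05), (1, 0.4, 0.02)],
]

no_hihat = mask_instrument(beat, "hihat")  # encoder input
target = beat                              # the model learns to restore the hi-hat
```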

Groove Transfer

Another fun application is one we call Groove Transfer. Here, we alter the model by giving the decoder direct access to the drum score via teacher forcing, encouraging the encoder to ignore the score and focus on embedding information about groove only. This variant of the model opens up creative possibilities for applying any “Groove Embedding” to any other drum pattern that we choose.

While it is not really clear what we should expect from a model like this (what would be the right way to play Straight Ahead Jazz with the groove from Funky Drummer?), pushing this model into confusing territory can make for fun exploration.
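For intuition only, here is a naive, non-learned stand-in for the idea of applying one beat's groove to another beat's score. It simply copies per-step average velocity and timing from a source beat onto a target score, which is not how the Groove Transfer model works (the model learns a groove embedding). All names and the default values are assumptions.

```python
REST = (0, 0.0, 0.0)  # (hit, velocity, offset) for a silent cell


def groove_template(source):
    """Average velocity and offset of the source beat at each step."""
    template = {}
    for step, cells in enumerate(source):
        played = [(vel, off) for hit, vel, off in cells if hit]
        if played:
            mean_vel = sum(vel for vel, _ in played) / len(played)
            mean_off = sum(off for _, off in played) / len(played)
            template[step] = (mean_vel, mean_off)
    return template


def apply_groove(score, template, default=(0.6, 0.0)):
    """Give every hit in `score` the template's velocity and offset for its step.

    `score[step][drum_index]` is 1 for a hit and 0 for a rest. Steps missing
    from the template fall back to `default` (an arbitrary choice).
    """
    return [
        [(1, *template.get(step, default)) if hit else REST for hit in cells]
        for step, cells in enumerate(score)
    ]


source = [
    [(1, 0.8, 0.1), REST],
    [REST, (1, 0.4, -0.2)],
]
score = [[1, 1], [0, 1]]
transferred = apply_groove(score, groove_template(source))
```

A fixed template like this cannot adapt the groove to a very different pattern, which is the part a learned embedding is meant to handle.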

Next Steps

The Groove MIDI Dataset, pretrained models, and code are open source and available for download, so feel free to use the data for research, train GrooVAE models on your own drum playing, or just take the MIDI files and use them as loops in your own songs.

Acknowledgements

None of this work would have been possible without the talent, hard work, and years of training of the drummers who recorded for our dataset.

We’d like to thank the following primary contributors:

- Dillon Vado (of Never Weather)

- Jonathan Fishman (of Phish)

- Michaelle Goerlitz (of Wild Mango)

- Nick Woodbury (of SF Contemporary Music Players)

- Randy Schwartz (of El Duo)

Additional drumming provided by: Jon Gillick, Mikey Steczo, Sam Berman, and Sam Hancock.

Thanks to Mike Tyka for help with the Colab.

Images

Drummer by Luis Prado from the Noun Project.

Drum Machine by Clayton Meador from the Noun Project.