What?

Magenta Studio is a collection of music creativity tools built on Magenta’s open source models, available both as standalone applications and as plugins for Ableton Live. They use cutting-edge machine learning techniques for music generation.

Each plugin lets you use Magenta.js models directly within Ableton Live. The plugins read and write MIDI from Ableton’s MIDI clips. If you don’t have Ableton, you can use the standalone apps with MIDI files from your desktop.

The first release includes 5 apps: Generate, Continue, Interpolate, Groove, and Drumify.

Generate uses MusicVAE to randomly generate 4-bar phrases from its model of melodies and drum patterns learned from hundreds of thousands of songs. Continue can extend a melody or drum pattern you give it, and Interpolate can combine features of your inputs to produce new ideas or create musical transitions between phrases. Groove is like many “humanize” plugins, but it adds human-like timing and velocity to drum parts based on learned models of performances by professional drummers. Drumify is similar to Groove, but it can turn any sequence into an accompanying drum performance.
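To give a feel for what "combining features of your inputs" can mean, here is a minimal sketch of interpolation in a latent space. It assumes, as in a variational autoencoder such as MusicVAE, that each input phrase has already been encoded as a vector of numbers and that a decoder (not shown) turns vectors back into notes. The function names `lerpLatent` and `interpolationPath` are hypothetical, and plain linear blending is used for illustration only; it is not necessarily how the plugins or Magenta.js blend phrases.

```typescript
// Blend two latent vectors: t = 0 returns a, t = 1 returns b.
function lerpLatent(a: number[], b: number[], t: number): number[] {
  if (a.length !== b.length) {
    throw new Error("latent vectors must have the same length");
  }
  return a.map((ai, i) => (1 - t) * ai + t * b[i]);
}

// Produce `steps` evenly spaced vectors from a to b, endpoints included.
// Decoding each one in order would give a gradual transition between phrases.
function interpolationPath(a: number[], b: number[], steps: number): number[][] {
  if (steps < 2) {
    throw new Error("need at least two steps");
  }
  return Array.from({ length: steps }, (_, k) => lerpLatent(a, b, k / (steps - 1)));
}
```

Calling `interpolationPath(zA, zB, 5)` on two encoded phrases would yield five vectors: the two originals at the ends and three blends in between.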

Given how simple these plugins make it to interact with complex machine learning models, we plan on using this platform to release more Magenta.js tools and models in the future.

You can read more about what these 5 plugins do and try them out yourself at g.co/magenta/studio, but in this blog post we want to focus a bit more on how we created these tools and why we did it in the first place.

Why?

With its rapid advances in recent years, machine learning (building algorithms from data instead of code) is proving to be a transformative technology. In addition to captioning images and driving cars, machine learning can be a great tool for creative expression, but there is currently a large barrier to its use by most artists and musicians both in terms of expertise and access to specialized accelerator hardware like GPUs and TPUs.

We aim to lower this barrier by developing models specifically targeted to the goals of creators, and then developing easy-to-use tools based on these models, a goal that we’ve been working towards for over three years.

In Magenta, we start with fundamental research, applying modern machine-learning techniques to the task of music generation. Thus far, we have been successful in this respect and have presented our research at all of the major machine learning conferences. Along with these papers, we have also released open source TensorFlow implementations of our models in our GitHub repository as well as some datasets to allow others to more easily replicate and build upon our work. Some artists and coders have even successfully used our code to help them create music, although as you can see, the interface to do this has not always been very user-friendly:

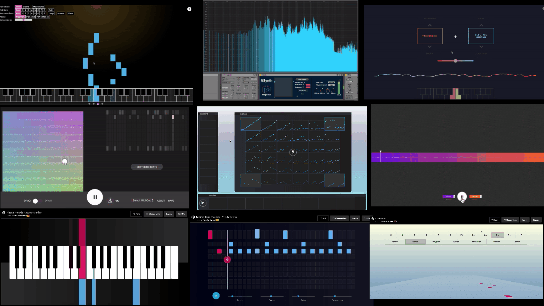

Recently, we have also begun to port these models to TensorFlow.js as part of our Magenta.js package, which allows them to be run efficiently from within a web browser, even on mobile devices. It has also enabled developers such as Tero Parviainen and Catherine McCurry to create much more usable interfaces, like the Neural Drum Machine and Latent Loops, among many others.

With Magenta Studio, we wanted to take this technology beyond the command line and web browser, and bring it to an environment that is already part of the workflow of many musicians and producers: the DAW. We hope that this integration will enable more creators to take advantage of this technology and adapt it to their own uses, while allowing us to learn how we might better focus our future research.

How?

For those interested in the technical details, we wanted to share a bit about how we put these plugins together. If you want to learn more, have a look at the code for yourself. For more background on the specific machine learning models, see our research page.

The plugins are built using Electron and Max for Live. Using Electron gives us a few advantages. It allows us to use TensorFlow.js and Magenta.js for GPU acceleration without requiring users to install any additional tools or GPU drivers. It also provides the ability to develop the interface with familiar tools such as Polymer. Finally, Electron lets us easily create a standalone version of each plugin for people who don’t have Ableton Live.

However, Electron has no way to communicate directly with Ableton Live for reading and writing MIDI clips, so we use Max for Live as an intermediate layer between Live and the Electron plugins. Max recently added the ability to run Node.js with the new Node for Max API, which we use to create a local server. The Electron apps then send messages over the local network to Max, which makes calls via the Live API to do things like find the notes in a clip or create a new clip. It’s somewhat indirect, but because the messages are small and travel over a local network, it works pretty quickly.
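As a rough illustration of the message-passing idea, here is a minimal sketch of the kind of dispatcher that could sit on the Max side of such a bridge. Everything in it is an assumption made for the example: the message names `getNotes` and `createClip`, the `Note` fields, and the `ClipStore` interface are hypothetical, and an in-memory store stands in for the Live API calls that Max would actually make. It is not the plugins' real protocol.

```typescript
// Hypothetical note shape for the example.
interface Note {
  pitch: number;    // MIDI pitch, 0-127
  start: number;    // start time in beats
  duration: number; // length in beats
  velocity: number; // MIDI velocity, 0-127
}

// Hypothetical messages an app might send to the bridge.
type BridgeMessage =
  | { type: "getNotes"; clipId: string }
  | { type: "createClip"; clipId: string; notes: Note[] };

interface BridgeReply {
  ok: true;
  notes?: Note[];
}

// Stand-in for the operations Max would perform through the Live API.
interface ClipStore {
  getNotes(clipId: string): Note[];
  createClip(clipId: string, notes: Note[]): void;
}

// In-memory implementation so the sketch runs without Max or Live.
class InMemoryClipStore implements ClipStore {
  private clips = new Map<string, Note[]>();

  getNotes(clipId: string): Note[] {
    return this.clips.get(clipId) ?? [];
  }

  createClip(clipId: string, notes: Note[]): void {
    this.clips.set(clipId, notes);
  }
}

// Route one small message to the matching clip operation.
function handleMessage(msg: BridgeMessage, store: ClipStore): BridgeReply {
  switch (msg.type) {
    case "getNotes":
      return { ok: true, notes: store.getNotes(msg.clipId) };
    case "createClip":
      store.createClip(msg.clipId, msg.notes);
      return { ok: true };
    default:
      // Reached only if untyped input (e.g. parsed JSON) has an unknown type.
      throw new Error("unknown message type");
  }
}
```

In a real bridge, a function like `handleMessage` would sit behind whatever local transport the Node for Max script exposes, with each incoming message parsed from JSON and each reply serialized back. Keeping the dispatcher separate from the transport makes it easy to test without Live running.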

Now What?

The focus of Magenta is not just to build cool new generative models (as much as that does excite us!), but also to find ways to include artists and musicians in the loop and learn from their feedback. So we’d love to hear what you make! Please give Magenta Studio a try, share what you’ve made, and let us know what you think by filling out our short survey for a chance to win a Magenta T-shirt. You can share your creations with #madewithmagenta and also give feedback on the magenta-discuss mailing list. See our community page for other options.

Beyond this initial set of plugins, we have a lot of other interesting models in the pipeline (including polyphonic transcription, transformer piano performances, and synthesis) that we hope to add to the lineup, in addition to our ongoing and future research. In this way, we hope Magenta Studio will be a platform to help us share developments more directly with artists.

We’re especially excited about the opportunity to allow artists to personalize models through local training, for example by applying techniques such as our work on latent constraints. Hopefully, this will enable you to customize your own models to do things that we never designed them to do, so stay tuned!

Who?

Magenta Studio is based on work by members of the Google Brain team’s Magenta project along with contributors to the Magenta and Magenta.js libraries. The plugins were implemented by Yotam Mann. Special thanks to Jon Gillick for his work on the GrooVAE model that powers the Groove and Drumify plugins, and to Signe Nørly and Claire Kayacik for help with design and user testing.

How to cite

If you extend or use this work, please cite the paper where it was introduced:

    @inproceedings{magentastudio,
      title = {Magenta Studio: Augmenting Creativity with Deep Learning in Ableton Live},
      author = {Adam Roberts and Claire Kayacik and Curtis Hawthorne and Douglas Eck and Jesse Engel and Monica Dinculescu and Signe Nørly},
      booktitle = {Proceedings of the International Workshop on Musical Metacreation (MUME)}
    }